Two-way GAN¶

Cycle-consistency loss works well for color and texture changes, but is not very successful at shape changes. For translation that involves shape change, consider UNIT and its variants.

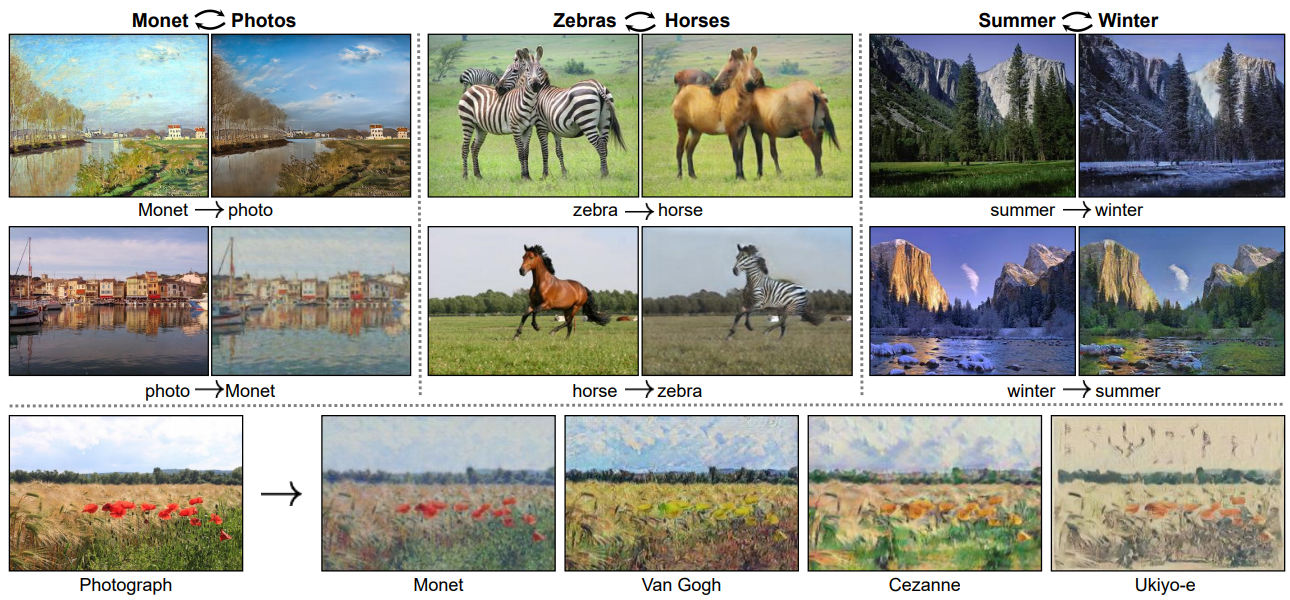

CycleGAN¶

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks (ICCV 2017) by Jun-Yan Zhu, Taesung Park, Phillip Isola, Alexei A. Efros

Project | Torch | pyTorch | CVPR2018 slides

Can be applied to any unpaired datasets (works better if the two datasets share similar visual content).

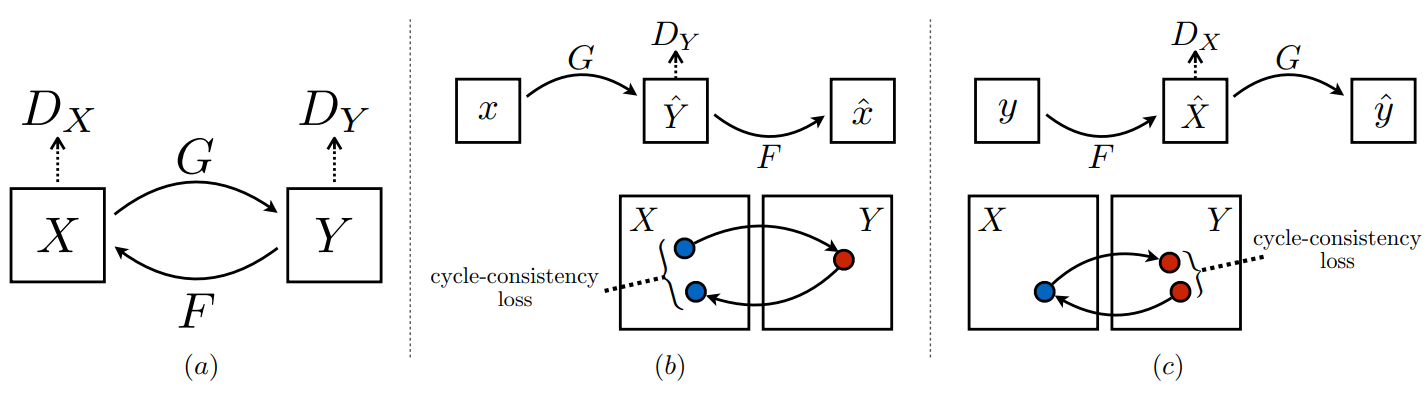

Adversarial Loss
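For reference, the adversarial loss for the mapping \(G: X \to Y\) with discriminator \(D_Y\), as I recall it from the CycleGAN paper (a symmetric term is defined for the reverse mapping \(F: Y \to X\) with discriminator \(D_X\)):

\[
\mathcal{L}_{GAN}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p_{data}(y)}\left[\log D_Y(y)\right] + \mathbb{E}_{x \sim p_{data}(x)}\left[\log\left(1 - D_Y(G(x))\right)\right]
\]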

Cycle Consistency Loss
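For reference, the cycle-consistency loss penalizes the L1 distance between each image and its reconstruction after a round trip through both mappings \(G: X \to Y\) and \(F: Y \to X\), as I recall it from the CycleGAN paper:

\[
\mathcal{L}_{cyc}(G, F) = \mathbb{E}_{x \sim p_{data}(x)}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y \sim p_{data}(y)}\left[\lVert G(F(y)) - y \rVert_1\right]
\]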

Full Objective
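The full objective in the CycleGAN paper combines two adversarial terms with a weighted cycle term: \(\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{GAN}(G, D_Y, X, Y) + \mathcal{L}_{GAN}(F, D_X, Y, X) + \lambda \mathcal{L}_{cyc}(G, F)\), with mappings \(G: X \to Y\), \(F: Y \to X\) and discriminators \(D_X\), \(D_Y\). Below is a minimal sketch that evaluates it in plain Python on toy 1-D "images" (lists of floats). The toy mappings, the constant discriminators, and all function names are hypothetical stand-ins, not the authors' implementation; a real setup would use neural networks and a framework such as pyTorch.

```python
import math

def l1(a, b):
    """Mean absolute difference between two equally sized vectors."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)

def gan_loss(gen, disc, sources, targets):
    """Sample estimate of E[log D(y)] + E[log(1 - D(G(x)))]."""
    real = sum(math.log(disc(y)) for y in targets) / len(targets)
    fake = sum(math.log(1.0 - disc(gen(x))) for x in sources) / len(sources)
    return real + fake

def cycle_loss(G, F, xs, ys):
    """Sample estimate of E[|F(G(x)) - x|_1] + E[|G(F(y)) - y|_1]."""
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward

def full_objective(G, F, D_X, D_Y, xs, ys, lam=10.0):
    """Two adversarial terms plus the lambda-weighted cycle term."""
    return (gan_loss(G, D_Y, xs, ys)
            + gan_loss(F, D_X, ys, xs)
            + lam * cycle_loss(G, F, xs, ys))

# Hypothetical toy setup: domain Y is domain X shifted by +1.
G = lambda x: [v + 1.0 for v in x]   # X -> Y
F = lambda y: [v - 1.0 for v in y]   # Y -> X
D_X = lambda x: 0.5                  # maximally unsure discriminators
D_Y = lambda y: 0.5
xs = [[0.0, 0.1], [0.2, 0.3]]
ys = [[1.0, 1.1], [1.2, 1.3]]

print(cycle_loss(G, F, xs, ys))               # close to zero: F inverts G
print(full_objective(G, F, D_X, D_Y, xs, ys))
```

In training, the generators minimize this objective while the discriminators maximize it; the default `lam=10.0` is only a starting point to tune.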

my testing¶

In my tests, CycleGAN was good at color and texture changes but bad at shape changes.

Other two-way GAN¶

Dual GAN: DualGAN: Unsupervised Dual Learning for Image-to-Image Translation (ICCV 2017)

DiscoGAN: Learning to Discover Cross-Domain Relations with Generative Adversarial Networks (ICML 2017)

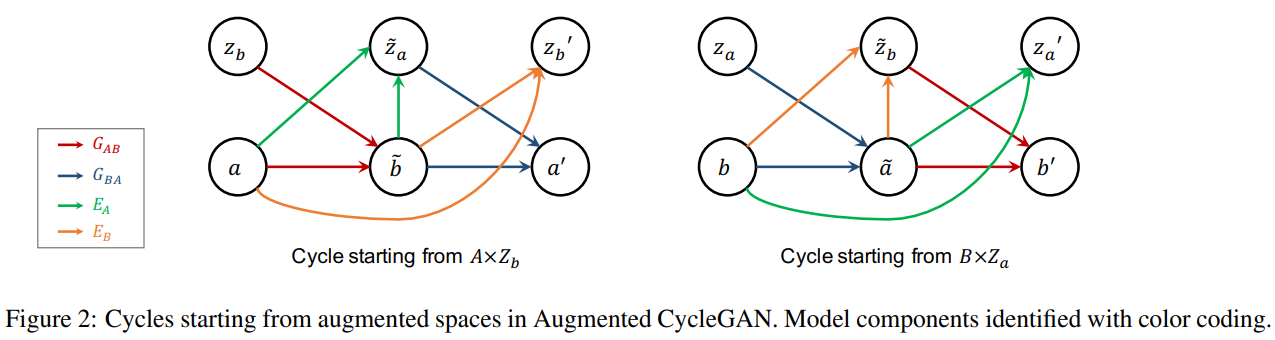

Augmented CycleGAN¶

Augmented CycleGAN: Learning Many-to-Many Mappings from Unpaired Data (ICML 2018)

pyTorch (Python2, pyTorch 0.3) | Theano re-implementation

Apart from the generators, the model also has 2 encoders, \(E_A: A \times B \to Z_a\) and \(E_B: B \times A \to Z_b\), which enable optimization of cycle-consistency with stochastic, structured mappings.
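A minimal sketch of how the encoders might close the cycle, under my reading of the paper: the generators take a latent code as an extra input (\(G_{AB}: A \times Z_b \to B\), \(G_{BA}: B \times Z_a \to A\)), and a cycle starting from \((a, z_b)\) reconstructs both the image and the latent code. The toy functions and names below are hypothetical and chosen only so that the cycle closes exactly.

```python
def l1(a, b):
    """Mean absolute difference between two equally sized vectors."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)

def augmented_cycle_losses(a, z_b, G_AB, G_BA, E_A, E_B):
    """Cycle (a, z_b) -> (b_fake, z_a) -> (a_rec, z_b_rec)."""
    b_fake = G_AB(a, z_b)       # translate a into domain B, steered by z_b
    z_a = E_A(a, b_fake)        # infer the code that ties b_fake back to a
    a_rec = G_BA(b_fake, z_a)   # translate back into domain A
    z_b_rec = E_B(b_fake, a)    # recover the code used in the forward pass
    return l1(a_rec, a), l1(z_b_rec, z_b)

# Hypothetical toy mappings: the latent code is a scalar offset.
G_AB = lambda a, z: [v + z[0] for v in a]
G_BA = lambda b, z: [v + z[0] for v in b]
E_A = lambda a, b: [a[0] - b[0]]
E_B = lambda b, a: [b[0] - a[0]]

image_loss, latent_loss = augmented_cycle_losses(
    [1.0, 2.0], [0.5], G_AB, G_BA, E_A, E_B)
print(image_loss, latent_loss)
```

Sampling different values of `z_b` for the same `a` yields different translations, which is what makes the mapping many-to-many.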

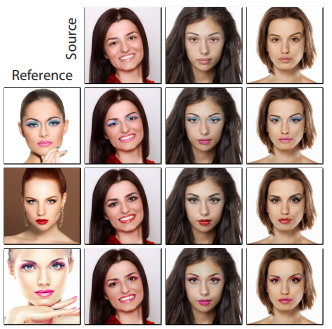

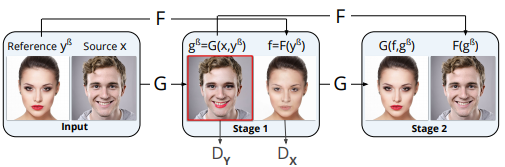

Paired CycleGAN¶

PairedCycleGAN: Asymmetric Style Transfer for Applying and Removing Makeup (CVPR 2018)

Can apply a specified style from input_reference to input_source, as a one-to-many transformation.

Pre-train the makeup removal function F (many-to-one) with CycleGAN first, then alternate the training of the makeup transfer function G (one-to-many).

Note: this can be viewed as a conditional GAN with (input source + input reference) as the conditions.

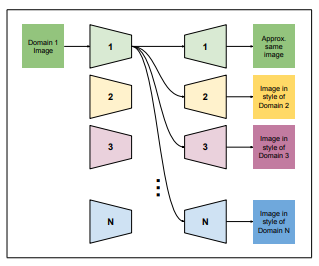

ComboGAN¶

ComboGAN: Unrestrained Scalability for Image Domain Translation (CVPR 2018)

Uses one encoder-decoder pair per domain, with all pairs sharing the latent coding.
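A minimal sketch of the idea, assuming one encoder and one decoder per domain: translating from domain i to domain j composes encoder i with decoder j through the shared latent code, so n domains need n pairs rather than one generator per ordered domain pair. The domain names and toy offset functions are hypothetical.

```python
def make_translator(encoders, decoders, src, dst):
    """Compose the source-domain encoder with the target-domain decoder."""
    enc, dec = encoders[src], decoders[dst]
    return lambda image: dec(enc(image))

# Hypothetical toy domains: each domain is a constant offset of a shared code.
offsets = {"spring": 0.0, "summer": 1.0, "autumn": 2.0, "winter": 3.0}
encoders = {d: (lambda img, o=o: [v - o for v in img]) for d, o in offsets.items()}
decoders = {d: (lambda code, o=o: [v + o for v in code]) for d, o in offsets.items()}

summer_to_winter = make_translator(encoders, decoders, "summer", "winter")
print(summer_to_winter([1.0, 1.5]))

n = len(offsets)
pairwise_generators = n * (n - 1)   # one generator per ordered domain pair
shared_pairs = n                    # one encoder-decoder pair per domain
print(pairwise_generators, shared_pairs)
```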

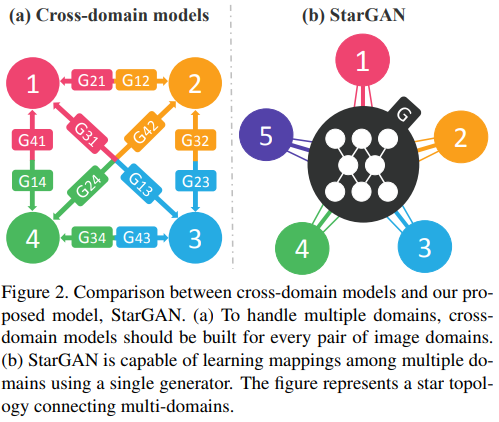

StarGAN¶

StarGAN: Unified Generative Adversarial Networks for Multi-Domain Image-to-Image Translation (CVPR 2018)

pyTorch code

Takes the target domain as a second input condition, given as a one-hot label (plus a mask vector).
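A minimal sketch of this conditioning in plain Python, assuming the label is replicated spatially and concatenated with the image channels, and that the mask vector is a one-hot indicator of which dataset's labels are valid when training on several datasets (labels of the other datasets are zeroed). Function names and shapes are hypothetical.

```python
def one_hot(index, size):
    """One-hot vector of the given size with a 1.0 at `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]

def condition_input(image, target_domain, num_domains):
    """image: list of channels, each an H x W nested list.
    Returns the image channels followed by one constant plane per domain."""
    height, width = len(image[0]), len(image[0][0])
    label = one_hot(target_domain, num_domains)
    planes = [[[value] * width for _ in range(height)] for value in label]
    return image + planes

def unified_label(label, dataset_index, label_sizes):
    """Concatenate per-dataset labels (zeros for the other datasets)
    and append a one-hot mask naming the dataset the label belongs to."""
    parts = []
    for i, size in enumerate(label_sizes):
        parts += label if i == dataset_index else [0.0] * size
    return parts + one_hot(dataset_index, len(label_sizes))

image = [[[0.5, 0.5], [0.5, 0.5]] for _ in range(3)]   # toy 3 x 2 x 2 image
generator_input = condition_input(image, target_domain=1, num_domains=3)
print(len(generator_input))                            # image + label channels

print(unified_label(one_hot(2, 5), dataset_index=0, label_sizes=[5, 3]))
```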

StarGANv2¶

StarGAN v2: Diverse Image Synthesis for Multiple Domains (CVPR 2020) | Github stargan-v2 | Youtube | Twitter | Reddit

Goal: train a single generator G that can generate diverse images of each domain y that correspond to the image x. Domain-specific style vectors are generated in the learned style space of each domain, and G is trained to reflect these style vectors. StarGAN's domain label is replaced with a domain-specific style code that can represent diverse styles of a specific domain, produced by 2 modules:

- mapping network: learns to transform random Gaussian noise into a style code

- style encoder: learns to extract the style code from a given reference image
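The two modules above can be sketched as follows, assuming each one has a shared trunk followed by one output head per domain, so that the style code is read from the head of the requested domain. This is a toy, linear, plain-Python stand-in with hypothetical names and sizes; the real networks are an MLP (mapping network) and a CNN (style encoder).

```python
import random

def linear(weights, vec):
    """Multiply a (rows x cols) weight matrix by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

class MultiHeadStyleModule:
    """Shared trunk followed by one style head per domain (toy version)."""

    def __init__(self, num_domains, in_dim, hidden_dim, style_dim, rng):
        self.trunk = random_matrix(hidden_dim, in_dim, rng)
        self.heads = [random_matrix(style_dim, hidden_dim, rng)
                      for _ in range(num_domains)]

    def __call__(self, vec, domain):
        hidden = [max(0.0, h) for h in linear(self.trunk, vec)]  # ReLU
        return linear(self.heads[domain], hidden)  # head of requested domain

rng = random.Random(0)
NUM_DOMAINS, LATENT_DIM, IMAGE_DIM, STYLE_DIM = 2, 8, 12, 4  # hypothetical sizes

mapping_network = MultiHeadStyleModule(NUM_DOMAINS, LATENT_DIM, 16, STYLE_DIM, rng)
style_encoder = MultiHeadStyleModule(NUM_DOMAINS, IMAGE_DIM, 16, STYLE_DIM, rng)

z = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]         # Gaussian noise
reference_image = [rng.random() for _ in range(IMAGE_DIM)]   # flattened toy image

style_from_noise = mapping_network(z, domain=1)
style_from_reference = style_encoder(reference_image, domain=1)
print(len(style_from_noise), len(style_from_reference))
```

Either style code can then be passed to G together with the source image: sampling new noise gives diverse outputs, while a reference image gives reference-guided synthesis.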

Comparison of above 4 conditional variants¶

| GAN | condition applies to | number of generators/encoders | input |
|---|---|---|---|
| Augmented CycleGAN | within domain | 2 generators | source image + encoding |
| Paired CycleGAN | within domain | 2 generators | source image + reference image |
| UNIT, ComboGAN | cross-domain | encoder-decoder pairs | source image (to target encoder) |
| StarGAN | cross-domain | 1 generator | source image + label of target domain |