Transfer Learning

How transferable are features in deep neural networks? (NIPS 2014)

Example: given labelled greyscale MNIST and unlabelled color MNIST, we want to train a classifier for color MNIST without labelling any color MNIST images.

Embedding

- word embedding in NLP, such as word2vec

- face embedding in images, such as FaceNet

- etc…

STL

Self-taught learning: transfer learning from unlabeled data (NIPS 2007)

Learn a feature representation from unlabelled data (e.g. with an auto-encoder), then train the classifier on the labelled data expressed in that representation.
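A minimal sketch of the self-taught learning pipeline, assuming NumPy, a tied-weight linear auto-encoder as the feature learner and a nearest-centroid classifier on top. All function names are hypothetical, and these are illustrative choices rather than the paper's exact method.

```python
import numpy as np

def train_linear_autoencoder(x_unlabelled, n_hidden, lr=0.005, epochs=500, seed=0):
    """Tied-weight linear auto-encoder: minimize mean ||x W W^T - x||^2 over W (d x k)."""
    rng = np.random.default_rng(seed)
    n, d = x_unlabelled.shape
    w = rng.normal(scale=0.1, size=(d, n_hidden))
    for _ in range(epochs):
        h = x_unlabelled @ w              # encode
        err = h @ w.T - x_unlabelled      # decode with the same weights, take the residual
        # gradient of the mean squared reconstruction error; tied weights give two terms
        grad = (x_unlabelled.T @ (err @ w) + err.T @ h) * (2.0 / n)
        w -= lr * grad
    return w

def reconstruction_error(x, w):
    """Mean squared reconstruction error per sample."""
    return float(((x @ w @ w.T - x) ** 2).sum(axis=1).mean())

def fit_nearest_centroid(features, labels):
    classes = np.unique(labels)
    centroids = np.stack([features[labels == c].mean(axis=0) for c in classes])
    return classes, centroids

def predict_nearest_centroid(features, classes, centroids):
    dists = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    return classes[dists.argmin(axis=1)]

# Unlabelled data (no labels used): most variance lies in the first 4 coordinates
rng = np.random.default_rng(1)
scales = np.array([3.0, 2.5, 2.0, 1.5] + [0.5] * 6)
x_unlabelled = rng.normal(size=(500, 10)) * scales
w = train_linear_autoencoder(x_unlabelled, n_hidden=4)

# Small labelled set: two classes separated along the first coordinate
centers = np.zeros((2, 10))
centers[:, 0] = [-2.0, 2.0]
y = np.repeat([0, 1], 20)
x_labelled = centers[y] + 0.3 * rng.normal(size=(40, 10))
classes, centroids = fit_nearest_centroid(x_labelled @ w, y)
pred = predict_nearest_centroid(x_labelled @ w, classes, centroids)
```

The encoder weights `w` are learned without any labels; only the small classifier on top touches labelled data.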

DaNN (PRICAI 2014)

Domain Adaptive Neural Networks for Object Recognition (PRICAI 2014)

Uses Maximum Mean Discrepancy (MMD) as a regularizer to reduce the distribution mismatch between the source and target domains in the latent space.
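The squared MMD between two samples can be estimated from kernel averages: mean k(s, s') + mean k(t, t') - 2 mean k(s, t). A minimal NumPy sketch of this (biased) estimator, assuming a single Gaussian kernel with a hand-picked bandwidth `sigma`; the kernel choice and function names are assumptions, not taken from the DaNN paper.

```python
import numpy as np

def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian kernel matrix between rows of a (n x d) and rows of b (m x d)."""
    sq_dists = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq_dists / (2.0 * sigma ** 2))

def mmd2(source, target, sigma=1.0):
    """Biased estimate of squared MMD between a source and a target sample."""
    k_ss = gaussian_kernel(source, source, sigma).mean()
    k_tt = gaussian_kernel(target, target, sigma).mean()
    k_st = gaussian_kernel(source, target, sigma).mean()
    return k_ss + k_tt - 2.0 * k_st
```

As a regularizer, `source` and `target` would be hidden-layer activations for a source batch and a target batch, and the value is added to the classification loss with some weight.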

DDC

Deep domain confusion: Maximizing for domain invariance (2014)

Adds an adaptation layer along with a domain confusion loss based on MMD.

Uses a deeper network than DaNN.
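A rough sketch of a DDC-style objective, assuming NumPy: classification loss on labelled source data plus a weighted MMD term on the adaptation-layer features. Here MMD is taken as the squared distance between the domain means of those features (MMD with a linear kernel); the weight `lam` and all names are illustrative, not values from the paper.

```python
import numpy as np

def linear_mmd2(source_feats, target_feats):
    """Squared distance between the mean adaptation-layer features of the two domains."""
    diff = source_feats.mean(axis=0) - target_feats.mean(axis=0)
    return float(diff @ diff)

def softmax_cross_entropy(logits, labels):
    shifted = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())

def ddc_loss(src_logits, src_labels, src_adapt, tgt_adapt, lam=1.0):
    """Source classification loss + lam * domain confusion (MMD) on the adaptation layer."""
    return softmax_cross_entropy(src_logits, src_labels) + lam * linear_mmd2(src_adapt, tgt_adapt)
```

Only the source batch needs labels; the target batch contributes through `tgt_adapt` alone.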

Distillation

Train a big model first, then use it as a teacher to train a small model (which has faster inference).

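- Do Deep Nets Really Need to be Deep? (NIPS 2014): the student learns the teacher's logits (the values before the softmax); unlabelled data can be added, since the teacher provides the targets.

- Distilling the Knowledge in a Neural Network (NIPS 2014): the student learns the teacher's soft targets (softmax outputs softened with a temperature). This works better than training the small model directly on the labelled data, probably because distillation prevents overfitting.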
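The two student objectives above can be sketched as follows, assuming NumPy arrays of logits with shape (batch, classes). Function names and the default temperature are illustrative assumptions.

```python
import numpy as np

def log_softmax(logits, temperature=1.0):
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)            # shift for numerical stability
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def softmax(logits, temperature=1.0):
    return np.exp(log_softmax(logits, temperature))

def logit_regression_loss(student_logits, teacher_logits):
    """Ba & Caruana style: regress the teacher's pre-softmax values (mean squared error)."""
    return float(((student_logits - teacher_logits) ** 2).mean())

def soft_target_loss(student_logits, teacher_logits, temperature=4.0):
    """Hinton et al. style: cross-entropy against temperature-softened teacher probabilities.

    Multiplied by temperature**2 so its gradient scale stays comparable across temperatures.
    """
    p_teacher = softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    return float(-(p_teacher * log_p_student).sum(axis=-1).mean() * temperature ** 2)
```

Hinton et al. also combine the soft-target term with an ordinary cross-entropy on the true labels, giving the latter a smaller weight.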

Feature Mimicking

Mimicking Very Efficient Network for Object Detection (CVPR 2017)
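A generic feature-mimic loss, as a hedged NumPy sketch: the small network's feature map is projected to the teacher's channel count (a 1x1 convolution, i.e. a per-position matrix multiply over channels) and pulled toward the teacher's feature map with an L2 loss. The `adapter` matrix and the NHWC layout are assumptions; this does not reproduce the paper's exact formulation.

```python
import numpy as np

def mimic_loss(student_feats, teacher_feats, adapter):
    """L2 feature-mimic loss.

    student_feats: (N, H, W, C_s) feature map of the small network
    teacher_feats: (N, H, W, C_t) feature map of the large network
    adapter:       (C_s, C_t) hypothetical 1x1 projection matching channel counts
    """
    projected = student_feats @ adapter   # per-pixel matmul over channels == 1x1 conv
    return float(((projected - teacher_feats) ** 2).mean())
```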

DAN

Learning Transferable Features with Deep Adaptation Networks (ICML 2015)

Multiple adaptation layers, each regularized with multi-kernel MMD (MK-MMD); MMD itself comes from A Kernel Two-Sample Test (JMLR 2012).

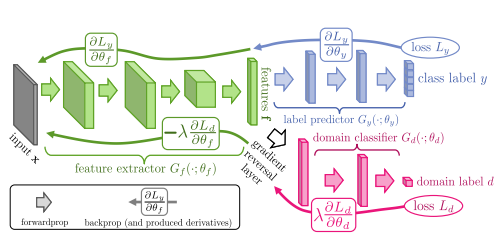
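MK-MMD replaces the single kernel with a combination of Gaussian kernels of different bandwidths, and DAN applies it to several layers. A NumPy sketch with equal kernel weights and the simple biased estimator; the paper also optimizes the kernel weights and uses a cheaper unbiased estimator, which this sketch omits. The bandwidths and names are assumptions.

```python
import numpy as np

SIGMAS = (0.5, 1.0, 2.0, 4.0)   # assumed kernel bandwidths

def multi_kernel(a, b, sigmas=SIGMAS):
    """Equal-weight average of Gaussian kernels with different bandwidths."""
    sq_dists = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.mean([np.exp(-sq_dists / (2.0 * s ** 2)) for s in sigmas], axis=0)

def mk_mmd2(source, target, sigmas=SIGMAS):
    """Biased squared MK-MMD between one source and one target batch."""
    return (multi_kernel(source, source, sigmas).mean()
            + multi_kernel(target, target, sigmas).mean()
            - 2.0 * multi_kernel(source, target, sigmas).mean())

def dan_penalty(source_layers, target_layers, sigmas=SIGMAS):
    """Sum MK-MMD over several adapted layers (one feature matrix per layer)."""
    return sum(mk_mmd2(s, t, sigmas) for s, t in zip(source_layers, target_layers))
```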

DANN

Domain-Adversarial Neural Networks (NIPS 2014) - Hana Ajakan

Unsupervised Domain Adaptation by Backpropagation (ICML 2015) - Yaroslav Ganin

Domain-Adversarial Training of Neural Networks (JMLR 2016) - Yaroslav Ganin, Hana Ajakan

| module | function |
|---|---|
| feature extractor | the model to be transferred and fine-tuned |
| label predictor | predicts the output (class label) |
| domain classifier | identifies whether an input comes from the source or the target domain. If the classifier can tell the two domains apart, the feature extractor is penalized, which forces it to learn features that mix the two domains |
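The adversarial part relies on the gradient reversal layer (GRL) from Ganin's papers: identity in the forward pass, gradient multiplied by -λ in the backward pass. A dependency-free sketch with hand-written backprop through a toy one-weight feature extractor and a logistic domain classifier; the toy model and all names are hypothetical (in a real framework the GRL would be a custom autograd op).

```python
import math

class GradientReversal:
    """Identity forward; multiplies the incoming gradient by -lam backward."""
    def __init__(self, lam=1.0):
        self.lam = lam

    def forward(self, features):
        return features

    def backward(self, grad_output):
        return -self.lam * grad_output

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def domain_branch_grads(w, v, c, x, domain, lam=1.0):
    """Backprop the binary cross-entropy domain loss through x -> f = w*x -> GRL -> classifier.

    domain: 0 for source, 1 for target. Returns (grad_w, grad_v, grad_c).
    """
    grl = GradientReversal(lam)
    f = w * x                              # toy feature extractor
    z = v * grl.forward(f) + c             # domain classifier logit
    dloss_dz = sigmoid(z) - domain         # d(BCE)/d(logit)
    grad_v = dloss_dz * f                  # classifier: ordinary gradient, learns to separate domains
    grad_c = dloss_dz
    grad_f = grl.backward(dloss_dz * v)    # gradient is reversed before reaching the extractor
    grad_w = grad_f * x                    # extractor: pushed to confuse the domain classifier
    return grad_w, grad_v, grad_c
```

Descending on these gradients trains the domain classifier to separate the domains while the extractor effectively ascends the same loss; the label predictor is trained on source labels as usual.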

DSN

Transferring GANs

Transferring GANs: generating images from limited data (ECCV 2018)

DNI

Deep Network Interpolation for Continuous Imagery Effect Transition (CVPR 2019) - CUHK + SenseTime

Project | GitHub: PyTorch implementation (see the last section of the README)

The network interpolation strategy is also used in the ablation study of ESRGAN. It is less costly than running the ablation by tuning loss weights, since interpolating between two already-trained networks requires no retraining.
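Network interpolation blends the parameters of two networks with the same architecture, theta = (1 - alpha) * theta_A + alpha * theta_B; ESRGAN uses this form to blend a PSNR-oriented generator with a GAN-trained one. A minimal sketch, assuming parameters are stored as a dict of NumPy arrays keyed by layer name (a hypothetical layout, similar in spirit to a PyTorch `state_dict`).

```python
import numpy as np

def interpolate_networks(params_a, params_b, alpha):
    """Return (1 - alpha) * params_a + alpha * params_b, parameter by parameter."""
    if params_a.keys() != params_b.keys():
        raise ValueError("networks must share the same architecture / parameter names")
    return {name: (1.0 - alpha) * params_a[name] + alpha * params_b[name] for name in params_a}
```

Sweeping `alpha` from 0 to 1 gives a continuous transition between the two networks' effects at the cost of a few forward passes, with no retraining.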