Recurrent Neural Network - RNN

\[h_t=f(Wx_t+U h_{t-1}+b)\]

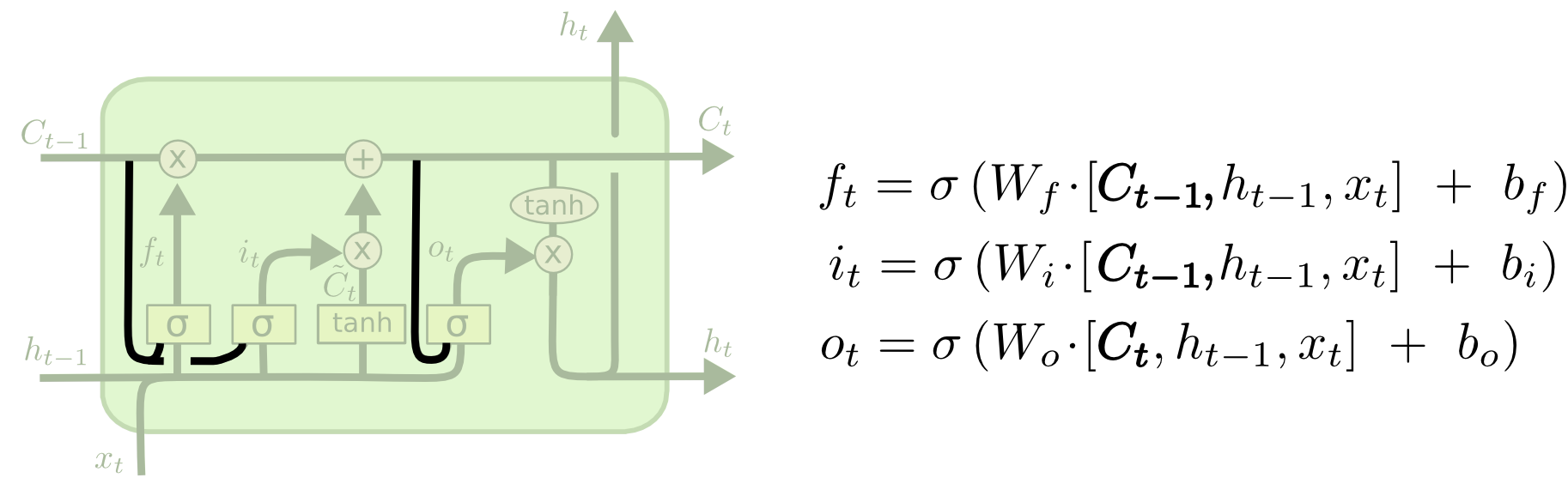
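The recurrence above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the choice $f=\tanh$, the helper names `rnn_step` and `rnn_forward`, and the toy dimensions are all assumptions made for the example.

```python
import numpy as np

def rnn_step(x_t, h_prev, W, U, b):
    """One step of h_t = f(W x_t + U h_{t-1} + b), with f = tanh (assumed)."""
    return np.tanh(W @ x_t + U @ h_prev + b)

def rnn_forward(xs, h0, W, U, b):
    """Apply rnn_step along a sequence and return every hidden state."""
    h = h0
    hs = []
    for x_t in xs:
        h = rnn_step(x_t, h, W, U, b)
        hs.append(h)
    return np.stack(hs)

# Toy sizes, chosen only for illustration.
rng = np.random.default_rng(0)
input_dim, hidden_dim, T = 3, 4, 5
W = rng.normal(scale=0.1, size=(hidden_dim, input_dim))   # input-to-hidden
U = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))  # hidden-to-hidden
b = np.zeros(hidden_dim)
xs = rng.normal(size=(T, input_dim))

hs = rnn_forward(xs, np.zeros(hidden_dim), W, U, b)
print(hs.shape)  # (5, 4)
```

The same `W`, `U` and `b` are reused at every time step, which is what makes the network recurrent.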

LSTM

Long Short-term Memory (Neural Computation 1997)

In vanilla RNNs, gradients tend to vanish or explode during back-propagation through time.
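A short worked expression shows where this comes from. Assuming the recurrence $h_t=f(Wx_t+Uh_{t-1}+b)$ with pre-activation $a_k=Wx_k+Uh_{k-1}+b$ (the symbols $a_k$ and $\gamma$ are introduced here only for the sketch), the chain rule gives a product of per-step Jacobians, with later steps multiplying on the left:

\[\frac{\partial h_T}{\partial h_t}=\prod_{k=t+1}^{T}\frac{\partial h_k}{\partial h_{k-1}}=\prod_{k=t+1}^{T}\operatorname{diag}\big(f'(a_k)\big)\,U\]

\[\left\lVert\frac{\partial h_T}{\partial h_t}\right\rVert\le\big(\gamma\,\lVert U\rVert\big)^{T-t},\qquad \gamma=\sup_a |f'(a)|\]

When $\gamma\lVert U\rVert<1$ the bound shrinks exponentially in $T-t$, so the gradient vanishes. When the factor exceeds one the bound allows, but does not guarantee, exponential growth.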

The LSTM learns long-term dependencies by carrying information in a cell state $C$.

Understanding LSTM Networks – colah’s blog
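As a rough sketch of how the cell state is used, the step below follows the common formulation in which each gate acts on the concatenation $[h_{t-1}, x_t]$. The parameter names (`W_f`, `b_f`, ...), the helper `init_lstm_params` and the toy sizes are assumptions for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, C_prev, params):
    """One LSTM step; every gate sees the concatenation [h_{t-1}, x_t]."""
    z = np.concatenate([h_prev, x_t])
    f_t = sigmoid(params["W_f"] @ z + params["b_f"])      # forget gate
    i_t = sigmoid(params["W_i"] @ z + params["b_i"])      # input gate
    C_tilde = np.tanh(params["W_C"] @ z + params["b_C"])  # candidate cell state
    C_t = f_t * C_prev + i_t * C_tilde                    # additive cell update
    o_t = sigmoid(params["W_o"] @ z + params["b_o"])      # output gate
    h_t = o_t * np.tanh(C_t)
    return h_t, C_t

def init_lstm_params(input_dim, hidden_dim, rng):
    """Hypothetical helper: small random weights, zero biases."""
    params = {}
    for name in ("f", "i", "C", "o"):
        params[f"W_{name}"] = rng.normal(
            scale=0.1, size=(hidden_dim, hidden_dim + input_dim))
        params[f"b_{name}"] = np.zeros(hidden_dim)
    return params

rng = np.random.default_rng(0)
params = init_lstm_params(input_dim=3, hidden_dim=4, rng=rng)
h, C = np.zeros(4), np.zeros(4)
for x_t in rng.normal(size=(5, 3)):
    h, C = lstm_step(x_t, h, C, params)
print(h.shape, C.shape)
```

The cell update is additive: when $f_t$ is close to 1 and $i_t$ is close to 0, $C_t$ is nearly a copy of $C_{t-1}$, which gives gradients a path that is not repeatedly squashed.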

GRU

Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling (NIPS 2014)

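A simplified variant of the LSTM: it merges the cell state and hidden state into one vector and replaces the separate forget and input gates with a single update gate.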
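A minimal sketch of one GRU step, to make the simplification concrete. It assumes the convention $h_t=(1-z_t)\odot h_{t-1}+z_t\odot\tilde{h}_t$ (some texts swap the roles of $z_t$ and $1-z_t$); the bias terms, parameter names and helper `init_gru_params` are assumptions for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x_t, h_prev, p):
    """One GRU step: two gates and a single state vector h."""
    z_t = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev + p["b_z"])  # update gate
    r_t = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev + p["b_r"])  # reset gate
    # Candidate state: the reset gate scales how much of h_prev is used.
    h_tilde = np.tanh(p["W_h"] @ x_t + p["U_h"] @ (r_t * h_prev) + p["b_h"])
    # Interpolate between the old state and the candidate.
    return (1.0 - z_t) * h_prev + z_t * h_tilde

def init_gru_params(input_dim, hidden_dim, rng):
    """Hypothetical helper: small random weights, zero biases."""
    p = {}
    for name in ("z", "r", "h"):
        p[f"W_{name}"] = rng.normal(scale=0.1, size=(hidden_dim, input_dim))
        p[f"U_{name}"] = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
        p[f"b_{name}"] = np.zeros(hidden_dim)
    return p

rng = np.random.default_rng(0)
p = init_gru_params(input_dim=3, hidden_dim=4, rng=rng)
h = np.zeros(4)
for x_t in rng.normal(size=(5, 3)):
    h = gru_step(x_t, h, p)
print(h.shape)  # (4,)
```

Compared with an LSTM step there is no separate cell state and no output gate, so a GRU has three weight groups where an LSTM has four.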

FC-LSTM

Learning Precise Timing with LSTM Recurrent Networks (JMLR 2003), Generating Sequences With Recurrent Neural Networks (2013)

Fully connected LSTM with peephole connections: the cell state $C$ is added as an input to the computation of the gates $f_t$, $i_t$ and $o_t$ (from colah).
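Written out, the peephole idea amounts to letting each gate see the cell state alongside $h_{t-1}$ and $x_t$. The equations below are a sketch in the concatenation notation used in colah's post; the weight and bias symbols $W_f, W_i, W_o, b_f, b_i, b_o$ are assumed names, and the output gate is taken to look at the updated state $C_t$ rather than $C_{t-1}$.

\[f_t=\sigma\big(W_f\cdot[C_{t-1},h_{t-1},x_t]+b_f\big)\]

\[i_t=\sigma\big(W_i\cdot[C_{t-1},h_{t-1},x_t]+b_i\big)\]

\[o_t=\sigma\big(W_o\cdot[C_t,h_{t-1},x_t]+b_o\big)\]

In the cited papers the cell-to-gate weights are typically restricted to be diagonal, so each gate unit only peeks at its own cell.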