Improve training stability¶

LSGAN¶

Least Squares Generative Adversarial Networks

Adopts a least-squares loss function for the discriminator. Minimizing this objective amounts to minimizing the Pearson χ² divergence, and the loss penalizes fake samples that lie far from the decision boundary, pulling them toward it.
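
As a rough illustration of the least-squares objective, here is a minimal sketch in plain Python. The function names and the 0/1 target coding (`a=0`, `b=1`, `c=1`) are assumptions chosen for illustration. The LSGAN paper also discusses a coding with `b - c = 1` and `b - a = 2` (for example `a=-1`, `b=1`, `c=0`), which is the setting where the Pearson χ² connection holds exactly.

```python
def lsgan_d_loss(d_real, d_fake, a=0.0, b=1.0):
    """Discriminator loss: push D(real) toward b and D(fake) toward a."""
    real_term = sum((s - b) ** 2 for s in d_real) / len(d_real)
    fake_term = sum((s - a) ** 2 for s in d_fake) / len(d_fake)
    return 0.5 * real_term + 0.5 * fake_term


def lsgan_g_loss(d_fake, c=1.0):
    """Generator loss: push D(fake) toward c, the value G wants D to output."""
    return 0.5 * sum((s - c) ** 2 for s in d_fake) / len(d_fake)


# A fake sample scored 3.0 is on the "real" side of the boundary, yet it is
# still penalized for being far from the target value c = 1.0.
far_loss = lsgan_g_loss([3.0])
near_loss = lsgan_g_loss([1.5])
```

Unlike the sigmoid cross-entropy loss, the quadratic penalty keeps growing with distance from the target, so samples that are already classified as real but sit far from the boundary still receive a gradient.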

According to Gapeng, a Google study, and ajolicoeur/cats, the LS loss does not seem to improve results, but DeblurGANv2 uses it. I guess the least-squares loss is suitable for restoration/enhancement tasks, but not for generative tasks.

WGAN (ICML 2017)¶

Towards Principled Methods for Training Generative Adversarial Networks

Wasserstein GAN

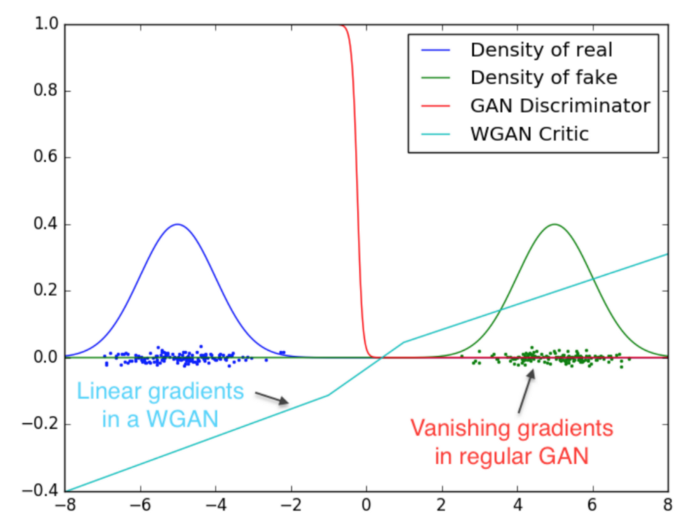

Improves the stability of learning by using the Wasserstein-1 distance.

Note: WGAN aims to stabilize GAN training without extra tweaking, not to improve output quality.

Wasserstein distance = Earth-Mover (EM) distance¶

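The KL and JS divergences can be discontinuous with respect to the generator parameters (for example, when the two distributions have disjoint supports), which makes neural network training unstable. WGAN introduces the Wasserstein distance, which is continuous.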

The Wasserstein distance is the minimum cost of transporting mass to convert the data distribution q into the data distribution p.
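
To make the contrast concrete, here is a small sketch in plain Python. It assumes 1-D samples of equal size, where the Wasserstein-1 distance reduces to the mean gap between sorted values. The function names are hypothetical, and the two-bin JS computation is only a toy stand-in for distributions with disjoint supports.

```python
import math


def wasserstein_1d(xs, ys):
    """W1 between two equal-size 1-D samples: mean gap between sorted values."""
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


def js_divergence(p, q):
    """JS divergence between two discrete distributions on the same bins."""
    def kl(a, b):
        return sum(ai * math.log(ai / bi) for ai, bi in zip(a, b) if ai > 0)

    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


# Point mass at 0 versus point mass at theta, for several shifts theta.
# W1 grows with the shift, so it tells the generator how far off it is.
w1_values = [wasserstein_1d([0.0] * 4, [theta] * 4) for theta in (1.0, 2.0, 5.0)]

# With disjoint supports the JS divergence is log(2) no matter how far apart
# the two masses are, so it carries no useful gradient.
js_disjoint = js_divergence([1.0, 0.0], [0.0, 1.0])
```

In this toy setting the JS divergence stays flat for every nonzero shift, while the Wasserstein distance shrinks smoothly as the two distributions move closer.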

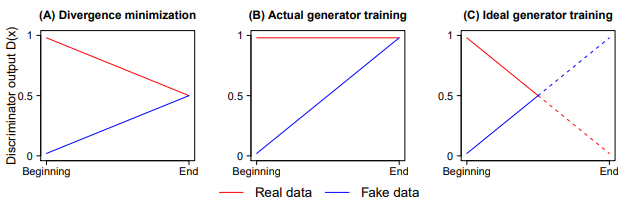

Solves: vanishing gradients in GANs caused by a good discriminator paired with a bad generator.

Apart from Wasserstein-1 distance:¶

- No log in the loss. The output of D is no longer a probability, hence we do not apply sigmoid at the output of D

- Clip the weights of D to [-0.01, 0.01]

- Train D more often than G (5:1)

- Do not use a momentum-based optimizer. Use RMSProp instead of Adam. (SGD is also okay)

- Lower learning rate (0.00005)
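
The recipe above can be sketched in plain Python with a toy 1-D linear critic. Everything here is an illustrative assumption rather than the paper's implementation: the function names, the linear critic, and the hand-written RMSProp step are hypothetical, and the gradient is written out analytically because the critic is linear. The constants (0.01, 5, 0.00005) are the ones listed in the notes.

```python
import math

CLIP = 0.01      # weight-clipping bound
N_CRITIC = 5     # critic (D) updates per generator update
LR = 0.00005     # learning rate


def critic(x, w, b):
    """Toy linear critic: returns a raw score, no sigmoid."""
    return w * x + b


def critic_loss(real, fake, w, b):
    """Critic loss without logs: E[D(fake)] - E[D(real)]."""
    mean_fake = sum(critic(x, w, b) for x in fake) / len(fake)
    mean_real = sum(critic(x, w, b) for x in real) / len(real)
    return mean_fake - mean_real


def rmsprop_step(param, grad, sq_avg, lr=LR, alpha=0.99, eps=1e-8):
    """One RMSProp update (running average of squared gradients, no momentum)."""
    sq_avg = alpha * sq_avg + (1 - alpha) * grad * grad
    param = param - lr * grad / (math.sqrt(sq_avg) + eps)
    return param, sq_avg


def clip(value, bound=CLIP):
    """Clamp a weight into [-bound, bound]."""
    return max(-bound, min(bound, value))


def train_critic(real, fake, w=0.0, b=0.0):
    """Run N_CRITIC critic updates, clipping the weight after each one."""
    sq_w = 0.0
    for _ in range(N_CRITIC):
        # For a linear critic, d(loss)/dw = mean(fake) - mean(real);
        # the bias cancels out of the loss, so its gradient is zero.
        grad_w = sum(fake) / len(fake) - sum(real) / len(real)
        w, sq_w = rmsprop_step(w, grad_w, sq_w)
        w = clip(w)
    return w, b
```

In a full training loop, one generator update would follow each call to `train_critic`, giving the 5:1 schedule.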

WGAN-GP (NIPS 2017)¶

Improved Training of Wasserstein GANs

WGAN issue: relies on weight clipping to enforce the (Lipschitz) continuity constraint

Contribution: gradient penalty

- Do NOT use batch normalization in the critic (D)
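
A minimal sketch of the gradient penalty term in plain Python follows. It is an illustration under stated assumptions, not the paper's code: the function names are hypothetical, the gradient is estimated with central finite differences because no autograd library is used here, and the coefficient 10 is the value reported in the WGAN-GP paper rather than something stated in these notes.

```python
import math
import random

LAMBDA_GP = 10.0  # penalty coefficient (value used in the WGAN-GP paper)


def numerical_grad(f, x, h=1e-5):
    """Central-difference estimate of the gradient of scalar f at point x."""
    grad = []
    for i in range(len(x)):
        up = list(x)
        up[i] += h
        down = list(x)
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad


def gradient_penalty(critic, real_batch, fake_batch, lam=LAMBDA_GP, rng=random):
    """lam * mean((||grad_x critic(x_hat)|| - 1)^2) over interpolated points."""
    total = 0.0
    for real, fake in zip(real_batch, fake_batch):
        eps = rng.random()  # random mixing weight in [0, 1)
        x_hat = [eps * r + (1 - eps) * f for r, f in zip(real, fake)]
        grad = numerical_grad(critic, x_hat)
        norm = math.sqrt(sum(g * g for g in grad))
        total += (norm - 1.0) ** 2
    return lam * total / len(real_batch)


real_batch = [[1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 0.0]]
fake_batch = [[0.0, 1.0, 0.0, 1.0], [2.0, 2.0, 2.0, 2.0]]

# critic(x) = sum(x) has gradient norm 2 everywhere, so it is penalized.
steep_penalty = gradient_penalty(lambda x: sum(x), real_batch, fake_batch)
# critic(x) = x[0] has gradient norm 1 everywhere, so the penalty vanishes.
unit_penalty = gradient_penalty(lambda x: x[0], real_batch, fake_batch)
```

The penalty is added to the critic loss in place of weight clipping. Because it is computed per sample, it does not combine well with batch normalization, which couples the samples in a batch.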

SN-GAN (ICLR 2018)¶

Spectral Normalization for Generative Adversarial Networks

One of the challenges in the study of generative adversarial networks is the instability of its training. In this paper, we propose a novel weight normalization technique called spectral normalization to stabilize the training of the discriminator. Our new normalization technique is computationally light and easy to incorporate into existing implementations. We tested the efficacy of spectral normalization on CIFAR10, STL-10, and ILSVRC2012 dataset, and we experimentally confirmed that spectrally normalized GANs (SN-GANs) is capable of generating images of better or equal quality relative to the previous training stabilization techniques.

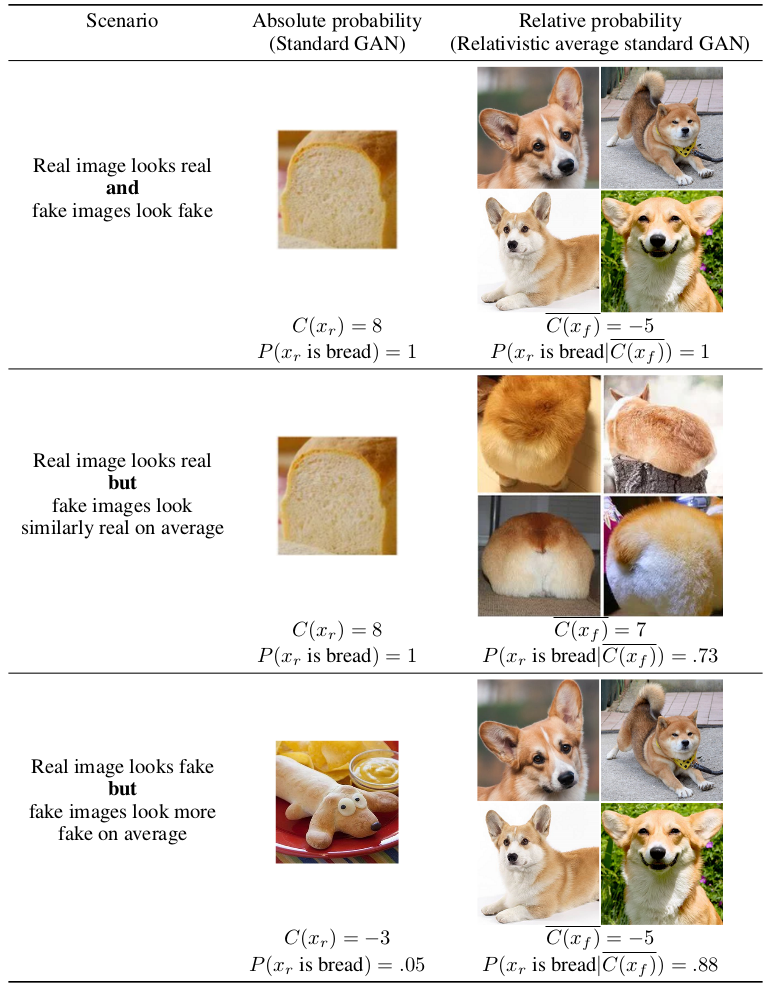
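
Spectral normalization divides each weight matrix by its largest singular value, which can be estimated with power iteration. The plain-Python sketch below is only an illustration: the function names are hypothetical, the starting vector is fixed for reproducibility, and many iterations are run per call. The SN-GAN paper instead keeps a persistent, randomly initialized vector and runs a single iteration per training step.

```python
import math


def _normalize(v):
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def spectral_norm(W, n_iters=50):
    """Estimate the largest singular value of matrix W by power iteration."""
    rows, cols = len(W), len(W[0])
    u = _normalize([1.0] * rows)  # fixed start vector (assumption)
    v = [0.0] * cols
    for _ in range(n_iters):
        # v <- W^T u, then u <- W v, each renormalized to unit length
        v = _normalize([sum(W[i][j] * u[i] for i in range(rows)) for j in range(cols)])
        u = _normalize([sum(W[i][j] * v[j] for j in range(cols)) for i in range(rows)])
    # sigma is approximately u^T W v
    return sum(u[i] * W[i][j] * v[j] for i in range(rows) for j in range(cols))


def spectral_normalize(W, n_iters=50):
    """Return W / sigma(W), so the result has spectral norm close to 1."""
    sigma = spectral_norm(W, n_iters)
    return [[w / sigma for w in row] for row in W]


W = [[0.0, 2.0], [1.0, 0.0]]       # singular values are 2 and 1
W_sn = spectral_normalize(W)       # largest singular value becomes about 1
```

Applying this to every layer of the discriminator bounds its Lipschitz constant without clipping weights or adding a penalty term to the loss.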

Relativistic Discriminator (ICLR 2019)¶

The relativistic discriminator: a key element missing from standard GAN

Relativistic GAN – Alexia Jolicoeur-Martineau | reddit

Also called RGAN; the relativistic average variant is RaGAN.

Aims to stabilize the GAN training process.
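
The relativistic average idea can be sketched in plain Python as follows. The discriminator no longer asks "is this sample real?" but "is this sample more realistic than the average sample of the opposite kind?". The function names are hypothetical, and the inputs are assumed to be raw critic scores C(x) before any sigmoid. The losses follow the standard-GAN (cross-entropy) form of the relativistic average loss.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x)); note -log(sigmoid(x)) = softplus(-x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mean(values):
    return sum(values) / len(values)


def ragan_d_loss(c_real, c_fake):
    """D wants real scores above the mean fake score, and fake below mean real."""
    mean_real, mean_fake = mean(c_real), mean(c_fake)
    real_term = mean([softplus(-(r - mean_fake)) for r in c_real])
    fake_term = mean([softplus(f - mean_real) for f in c_fake])
    return real_term + fake_term


def ragan_g_loss(c_real, c_fake):
    """G wants the opposite ordering, so the roles of real and fake are swapped."""
    mean_real, mean_fake = mean(c_real), mean(c_fake)
    fake_term = mean([softplus(-(f - mean_real)) for f in c_fake])
    real_term = mean([softplus(r - mean_fake) for r in c_real])
    return fake_term + real_term


# When D separates the two sets well, its loss is small and G's loss is large.
d_loss = ragan_d_loss([5.0, 5.0], [-5.0, -5.0])
g_loss = ragan_g_loss([5.0, 5.0], [-5.0, -5.0])
```

Note that the generator loss depends on the real samples too, which is the main difference from the standard GAN generator loss.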