Inception v1¶

Going Deeper with Convolutions (ILSVRC 2014)

By adding auxiliary classifiers connected to these intermediate layers, we would expect to encourage discrimination in the lower stages in the classifier, increase the gradient signal that gets propagated back, and provide additional regularization.
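As a rough sketch of how the auxiliary classifiers enter training: the GoogLeNet paper adds their losses to the main loss with a discount weight of 0.3 and discards the auxiliary branches at inference. The function name `total_loss` and the example loss values below are illustrative assumptions, not taken from the paper.

```python
def total_loss(main_loss, aux_losses, aux_weight=0.3):
    """Combine the main loss with discounted auxiliary losses (training only).

    aux_weight=0.3 follows the discount reported in the GoogLeNet paper;
    the function itself is a hypothetical helper for illustration.
    """
    return main_loss + aux_weight * sum(aux_losses)


# Made-up loss values for one training step with two auxiliary heads.
loss = total_loss(1.0, [2.0, 3.0])
print(loss)
```

At inference time only the main classifier would be evaluated, so the auxiliary terms add no cost to prediction.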

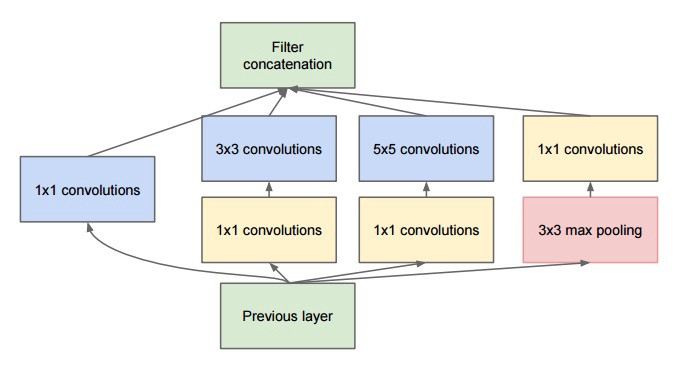

Application: GoogLeNet

Inception v2¶

Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift (ICML 2015). See also: normalization/batch normalization

Inception v3¶

Rethinking the Inception Architecture for Computer Vision (CVPR 2016)

Factorizing Convolutions with Large Filter Size¶

Factorization into smaller convolutions¶

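A 5x5 convolution costs more than two stacked 3x3 convolutions that cover the same 5x5 receptive field: 25 weights versus 9 + 9 = 18 per input/output channel pair, a saving of about 28%.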
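The comparison can be checked with a small calculation. This is a minimal sketch that assumes stride 1, equal input and output channel counts, and no biases; `conv_weights` and `receptive_field` are hypothetical helper names, and the channel count of 64 is arbitrary.

```python
def conv_weights(kh, kw, c_in, c_out):
    """Number of weights in a kh x kw convolution (biases ignored)."""
    return kh * kw * c_in * c_out


def receptive_field(kernel_sizes):
    """Receptive field of stacked stride-1 convolutions along one axis."""
    return 1 + sum(k - 1 for k in kernel_sizes)


c = 64  # arbitrary channel count chosen for illustration
five_by_five = conv_weights(5, 5, c, c)
two_three_by_three = 2 * conv_weights(3, 3, c, c)
ratio = two_three_by_three / five_by_five

print(receptive_field([3, 3]))       # same coverage as a single 5x5
print(five_by_five, two_three_by_three, ratio)
```

The ratio does not depend on the channel count here, because both variants scale with `c_in * c_out`.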

Spatial Factorization into Asymmetric Convolutions¶

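A 3x3 convolution costs more than a 3x1 convolution followed by a 1x3 convolution: 9 weights versus 3 + 3 = 6 per channel pair, about 33% cheaper for the same receptive field.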
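The saving grows with the kernel size. The sketch below counts weights per input/output channel pair for an n x n kernel against an n x 1 kernel followed by a 1 x n kernel. The helper names are hypothetical, and n = 7 is included only as a second illustrative size.

```python
def square_cost(n):
    """Weights per channel pair for one n x n convolution."""
    return n * n


def asymmetric_cost(n):
    """Weights per channel pair for an n x 1 followed by a 1 x n convolution."""
    return 2 * n


# Fraction of weights saved by the asymmetric factorization.
savings = {n: 1 - asymmetric_cost(n) / square_cost(n) for n in (3, 7)}

for n, s in savings.items():
    print(f"{n}x{n}: {square_cost(n)} vs {asymmetric_cost(n)} weights, saving {s:.0%}")
```

Note that this only counts weights. The factorized pair is a learned replacement with the same receptive field, not an exact reproduction of an arbitrary n x n kernel.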

Rethinking the Utility of Auxiliary Classifiers¶

Auxiliary classifiers did not improve convergence early in training. Near the end of training, the network with the auxiliary branches starts to overtake the accuracy of the network without any auxiliary branch and reaches a slightly higher plateau. The v1 hypothesis that auxiliary classifiers help evolve the low-level features is most likely misplaced. Instead, auxiliary classifiers act as a regularizer.

Inception v4, Inception-ResNet¶

Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning (AAAI 2017)

Pure Inception blocks¶

keep tuning…

residual scaling¶

Residual scaling stabilizes training and prevents the network from "dying" early. The residual is scaled down by a factor of 0.1 to 0.3 before the element-wise sum. Note: in other words, the residual branch is forced to learn an output roughly 3x to 10x larger than the value that is actually added. Is that how residual scaling avoids dying?
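A minimal sketch of the scaled sum, in plain Python so it runs without a framework. The names `scaled_residual_block` and `toy_branch` are assumptions for illustration; a real block would use an Inception branch in place of the toy function, and the ReLU after the sum is assumed here rather than taken from these notes.

```python
def relu(values):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, v) for v in values]


def scaled_residual_block(x, residual_fn, scale=0.2):
    """Compute relu(x + scale * residual_fn(x)) element-wise.

    scale is meant to sit in the 0.1 to 0.3 range mentioned above;
    scale=1.0 recovers an unscaled residual connection.
    """
    residual = residual_fn(x)
    return relu([xi + scale * ri for xi, ri in zip(x, residual)])


def toy_branch(x):
    """Hypothetical stand-in for a residual branch with large outputs."""
    return [10.0 * xi for xi in x]


x = [1.0, -2.0, 0.5]
unscaled = scaled_residual_block(x, toy_branch, scale=1.0)
scaled = scaled_residual_block(x, toy_branch, scale=0.2)
print(unscaled)
print(scaled)
```

With the smaller scale the branch contributes less to the sum, so the identity path dominates and activations change more gradually from block to block.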