Optimizer¶

Gradient Descent¶

Trade-off between accuracy and time to update¶

- Batch gradient descent

- Stochastic gradient descent, SGD

- Mini-batch gradient descent

Momentum¶

Momentum add add a fraction \(\gamma\) of the update factor

Second-order¶

AdaGrad¶

Adaptive Subgradient Methods for Online Learning and Stochastic Optimization (JMLR 2011)

adaptively tune learning rate based on the previous gradient

If the gradient of weight always small, amplify the learning rate.

If the gradient of weight always large, decrease the learning rate.

works well for sparse gradients

weakness: learning rate shrink to zero

AdaDelta¶

ADADELTA: An Adaptive Learning Rate Method (2012)

simplify AdaGrad to overcome the continual decay of learning rate

Accumulate over window (moving average) \(E[x]_t=\rho E[x]_{t-1}+(1-\rho)x_t\)

Root Mean Square: \( RMS[x]_t = \sqrt{E[x^2]_t+\epsilon} \)

RMSprop¶

Root Mean Square Propagation, concurrent work of AdaDelta

Adam¶

Adam: A Method for Stochastic Optimization (ICLR 2015)

Adaptive Momentum

RMSProp + Momentum + running average + bias-correction

AdaMax¶

Drawback¶

The Marginal Value of Adaptive Gradient Methods in Machine Learning (NIPS 2017) suggest use SGD(+momentum) over adaptive methods (AdaGrad, RMSProp, and Adam). Adam is fast but generalize worse than fine-tuned SGD. reddit discussion

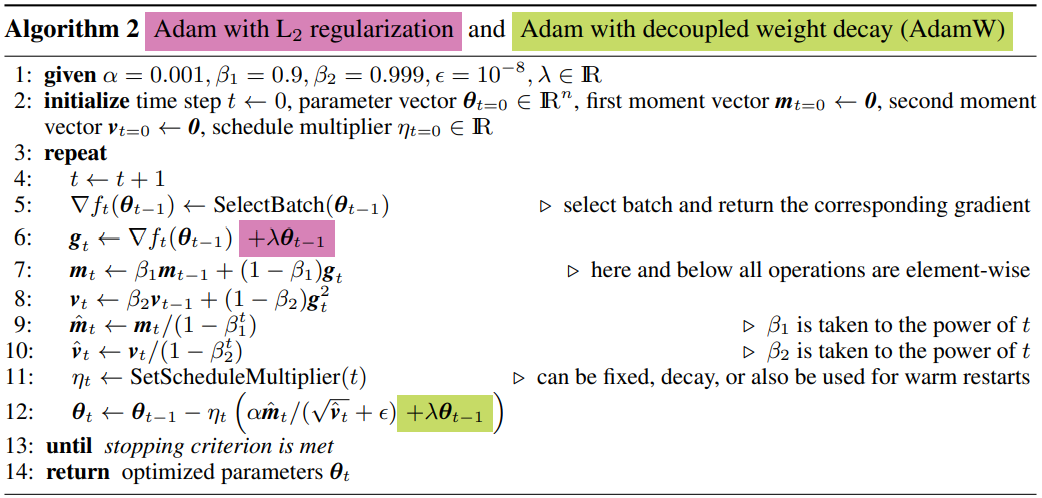

AdamW¶

Decoupled Weight Decay Regularization (ICLR 2019)

- \(L_2\) regularization and weight decay are not identical. The two techniques can be made equivalent for SGD by a reparameterization of the weight decay factor based on the learning rate; however, as is often overlooked, this is not the case for Adam. In particular, when combined with adaptive gradients, L2 regularization leads to weights with large historic parameter and/or gradient amplitudes being regularized less than they would be when using weight decay.

- \(L2\) regularization is not effective in Adam. One possible explanation why Adam and other adaptive gradient methods might be outperformed by SGD with momentum is that common deep learning libraries only implement L2 regularization, not the original weight decay. Therefore, on tasks/datasets where the use of L2 regularization is beneficial for SGD (e.g., on many popular image classification datasets), Adam leads to worse results than SGD with momentum (for which L2 regularization behaves as expected).

- Weight decay is equally effective in both SGD and Adam. For SGD, it is equivalent to L2 regularization, while for Adam it is not.

- Optimal weight decay depends on the total number of batch passes/weight updates. Our empirical analysis of SGD and Adam suggests that the larger the runtime/number of batch passes to be performed, the smaller the optimal weight decay.

- Adam can substantially benefit from a scheduled learning rate multiplier. The fact that Adam is an adaptive gradient algorithm and as such adapts the learning rate for each parameter does not rule out the possibility to substantially improve its performance by using a global learning rate multiplier, scheduled, e.g., by cosine annealing.

Cyclical Learning Rates¶

Cyclical Learning Rates for Training Neural Networks (WACV 2017)

Instead of monotonically decreasing the learning rate, this method lets the learning rate cyclically vary between reasonable boundary values

soft restart

LR Range test¶

ractically eliminates the need to experimentally find the best values and schedule for the global learning rates

Note: Even though some said the performance of cyclical learning rate is not significant, the concept of LR range test used for other cycle/restart based optimizer

SGDR¶

SGDR: Stochastic Gradient Descent with Warm Restarts (ICLR 2017)

SGD with cosine annealing hard restart

avoid local minimium, useful in many model, e.g. EDVR use Adam Optimizer with SGDR as learning rate scheduler