# Motion Retargeting

## LCM

Learning Character-Agnostic Motion for Motion Retargeting in 2D (SIGGRAPH 2019) - Kfir Aberman et al.

Project | PyTorch 0.4

LCM trains a deep neural network to decompose temporal sequences of 2D poses into three components: motion, skeleton, and camera view-angle. With this representation extracted, the motion can be recombined with novel skeletons and camera views and decoded into a retargeted temporal sequence.
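LCM learns this decomposition with neural encoders and a decoder. As a hypothetical, non-learned illustration of what separating motion from skeleton means, the sketch below treats a 2D pose as a simple joint chain (root first), takes the per-frame unit bone directions as "motion", the time-averaged bone lengths as "skeleton", and rebuilds frames by forward kinematics. The chain representation and all function names are assumptions, and the camera view-angle component is omitted.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Pose = List[Point]       # joints of a simple chain, root first (assumed layout)
Sequence = List[Pose]    # one pose per frame


def decompose(seq: Sequence):
    """Split a chain sequence into motion (unit bone directions per frame),
    skeleton (bone lengths averaged over time) and the root trajectory.
    Assumes no zero-length bones."""
    n_bones = len(seq[0]) - 1
    motion, lengths_sum, roots = [], [0.0] * n_bones, []
    for pose in seq:
        dirs = []
        for b in range(n_bones):
            dx = pose[b + 1][0] - pose[b][0]
            dy = pose[b + 1][1] - pose[b][1]
            length = math.hypot(dx, dy)
            lengths_sum[b] += length
            dirs.append((dx / length, dy / length))
        motion.append(dirs)
        roots.append(pose[0])
    skeleton = [s / len(seq) for s in lengths_sum]
    return motion, skeleton, roots


def recompose(motion, skeleton, roots) -> Sequence:
    """Forward kinematics: rebuild each frame from bone directions and lengths."""
    seq = []
    for dirs, root in zip(motion, roots):
        pose = [root]
        for (ux, uy), length in zip(dirs, skeleton):
            x, y = pose[-1]
            pose.append((x + ux * length, y + uy * length))
        seq.append(pose)
    return seq


def retarget(source: Sequence, target: Sequence) -> Sequence:
    """Play the motion (and root path) of `source` on the skeleton of `target`."""
    motion, _, roots = decompose(source)
    _, skeleton, _ = decompose(target)
    return recompose(motion, skeleton, roots)
```

For example, a source chain with two unit-length bones retargeted onto a target whose bones have lengths 2 and 0.5 keeps the source's bone directions but takes the target's lengths. Swapping one component while keeping the others is the analytic counterpart of recombining latent codes; a learned model is needed in real 2D video, where projected bone lengths also change with the view.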

Pose transfer (performance cloning) is not the focus of this paper. For rendering, it applies Deep Video-Based Performance Cloning directly, using skeleton retargeting instead of global scaling.
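To make the difference between the two strategies concrete, the hypothetical sketch below compares them on a single 2D joint chain. Global scaling multiplies every offset from the root by one factor (here the ratio of total chain lengths, an assumed choice), while skeleton retargeting keeps each source bone's direction but gives it the target's bone length. Function names are illustrative only.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def bone_lengths(pose: List[Point]) -> List[float]:
    """Lengths of consecutive bones in a joint chain (root first)."""
    return [math.dist(a, b) for a, b in zip(pose, pose[1:])]


def global_scale(pose: List[Point], target_pose: List[Point]) -> List[Point]:
    """Uniform scaling about the root so the total chain length matches the target."""
    s = sum(bone_lengths(target_pose)) / sum(bone_lengths(pose))
    rx, ry = pose[0]
    return [(rx + (x - rx) * s, ry + (y - ry) * s) for x, y in pose]


def skeleton_retarget(pose: List[Point], target_pose: List[Point]) -> List[Point]:
    """Keep each source bone's direction but use the target's bone length.
    Assumes both chains have the same number of joints."""
    target_lengths = bone_lengths(target_pose)
    out = [pose[0]]
    for i, length in enumerate(target_lengths):
        (ax, ay), (bx, by) = pose[i], pose[i + 1]
        d = math.dist(pose[i], pose[i + 1])
        x, y = out[-1]
        out.append((x + (bx - ax) / d * length, y + (by - ay) / d * length))
    return out
```

With a source chain whose bones are 1 and 1 and a target whose bones are 3 and 1, global scaling yields bones of 2 and 2 (the source's proportions), while skeleton retargeting yields 3 and 1 (the target's proportions).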

Dataset: synthetic paired data

## EDN

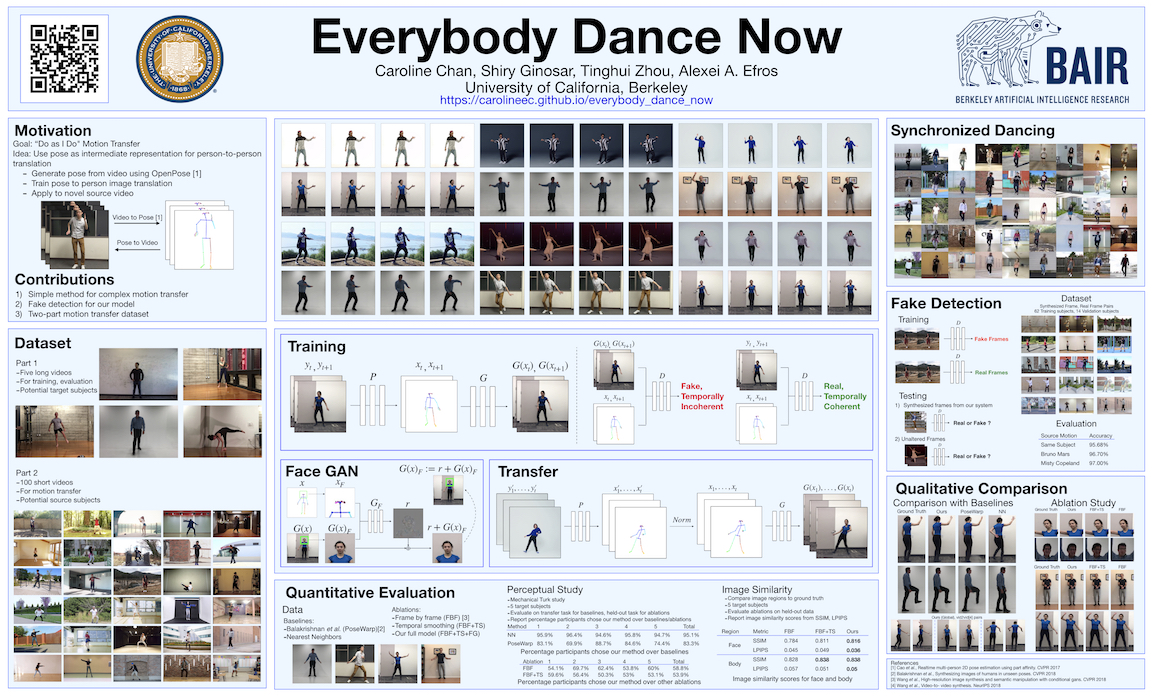

Everybody Dance Now (ICCV 2019)

Project | code not publicly available | reproduction in PyTorch

Concurrent work with vid2vid and LCM.

### Pose Encoding and Normalization

Encoding body poses + Global pose normalization
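Global pose normalization maps source poses into the target video's framing using the ankle positions and subject heights observed at the closest and farthest positions in each video. The sketch below assumes a linear interpolation of the height ratio and of the ankle position between those two extremes. The `VideoStats` container, its field names, and applying the scale about the ankle point are hypothetical simplifications, not the authors' code.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class VideoStats:
    """Hypothetical per-video statistics (image coordinates, y grows downward)."""
    ankle_min: float     # smallest ankle y: subject farthest from the camera
    ankle_max: float     # largest ankle y: subject closest to the camera
    height_far: float    # subject height in pixels at the far position
    height_close: float  # subject height in pixels at the close position


def normalize_frame(ankle_y: float, src: VideoStats, tgt: VideoStats) -> Tuple[float, float]:
    """Return (scale, vertical translation) for one source frame."""
    # Relative depth of the source subject in [0, 1]: 0 = far, 1 = close.
    alpha = (ankle_y - src.ankle_min) / (src.ankle_max - src.ankle_min)
    # Interpolate the target/source height ratio between the far and close extremes.
    far_ratio = tgt.height_far / src.height_far
    close_ratio = tgt.height_close / src.height_close
    scale = far_ratio + alpha * (close_ratio - far_ratio)
    # Map the ankle to the same relative position in the target's ankle range.
    target_ankle = tgt.ankle_min + alpha * (tgt.ankle_max - tgt.ankle_min)
    return scale, target_ankle - ankle_y


def apply_normalization(points: List[Tuple[float, float]], ankle: Tuple[float, float],
                        scale: float, translation: float) -> List[Tuple[float, float]]:
    """Scale keypoints about the ankle point, then shift them vertically."""
    ax, ay = ankle
    return [(ax + (x - ax) * scale, ay + (y - ay) * scale + translation) for x, y in points]
```

A source subject halfway between its extremes is mapped halfway between the target's extremes, with a scale halfway between the far and close height ratios.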

### Pose to Video Translation

Frame-by-frame synthesis + Temporal smoothing + Face GAN
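For temporal smoothing, the generator is conditioned on the previously generated frame as well as the current pose, and the face GAN predicts a residual that is added to the face region. The loop below sketches that data flow with plain callables; `synthesize_video`, `generator`, `face_refiner`, and the scalar "frames" in the usage are hypothetical stand-ins. A real system operates on images and adds the face residual only inside a cropped face region.

```python
from typing import Any, Callable, List, Optional


def synthesize_video(
    poses: List[Any],
    generator: Callable[[Any, Any], Any],
    blank: Any,
    face_refiner: Optional[Callable[[Any, Any], Any]] = None,
) -> List[Any]:
    """Frame-by-frame synthesis where each frame is conditioned on the previous output."""
    frames: List[Any] = []
    previous = blank  # the first frame is conditioned on a blank image
    for pose in poses:
        frame = generator(pose, previous)
        if face_refiner is not None:
            # Toy simplification: the residual is added to the whole frame.
            frame = frame + face_refiner(pose, frame)
        frames.append(frame)
        previous = frame  # feed this output back in for the next frame
    return frames
```

With a toy generator `lambda pose, prev: 0.5 * pose + 0.5 * prev`, a blank of `0.0`, and three identical poses of `1.0`, the outputs are `[0.5, 0.75, 0.875]`: each frame depends on its predecessor, which is the coupling that smooths the video over time.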

### Dataset

- Target (person): five long single-dancer videos, used to train and evaluate the personalized model.

- Source (motion): a large collection of short YouTube videos, used for motion transfer and fake detection.

## TransMoMo

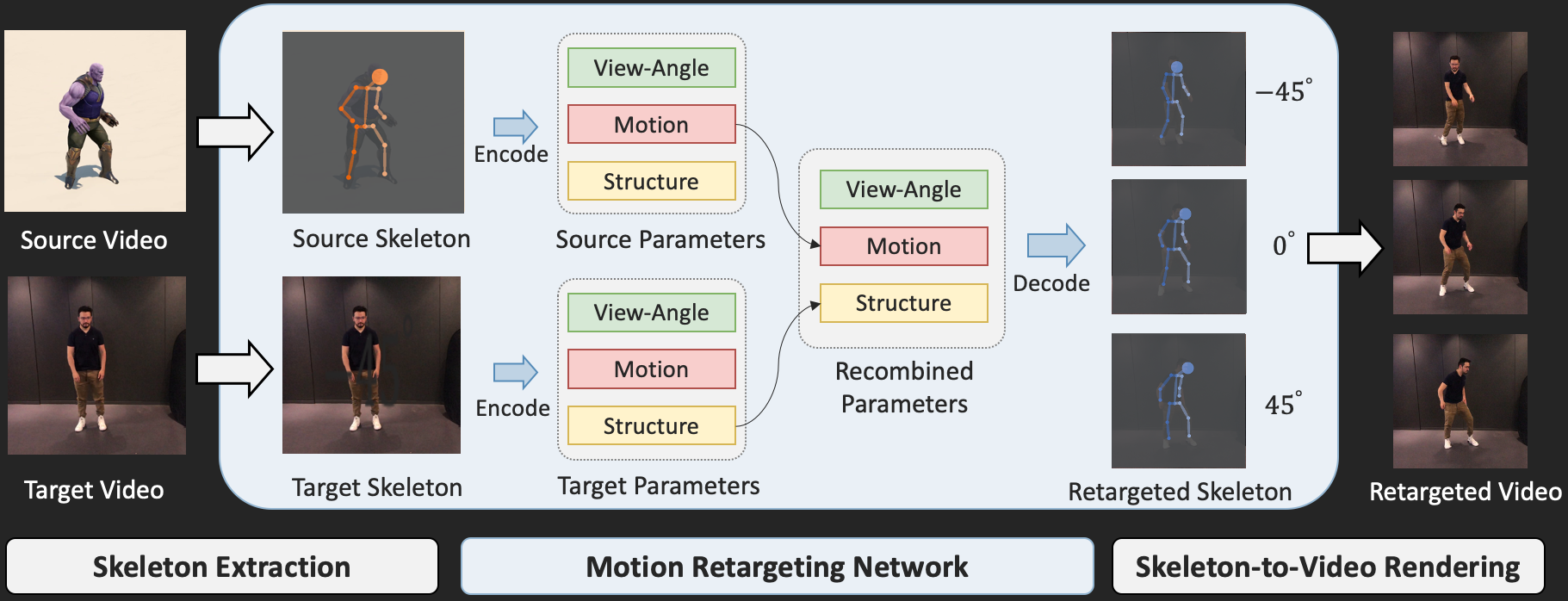

TransMoMo: Invariance-Driven Unsupervised Video Motion Retargeting (CVPR 2020)

Project | PyTorch

- Skeleton Extraction: OpenPose or DensePose

- Motion Retargeting Network

- Skeleton-to-Video Rendering: Everybody Dance Now
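The three stages compose into a single source-to-target pipeline. The sketch below wires them together with plain callables; the `RetargetingPipeline` class and its field names are hypothetical, and in practice each callable would wrap the actual component (a pose estimator, the retargeting network, an Everybody Dance Now style renderer).

```python
from dataclasses import dataclass
from typing import Any, Callable, List

Frame = Any
Keypoints = Any


@dataclass
class RetargetingPipeline:
    """Hypothetical composition of the three stages listed above."""
    extract: Callable[[Frame], Keypoints]                                      # skeleton extraction
    retarget: Callable[[List[Keypoints], List[Keypoints]], List[Keypoints]]   # motion retargeting
    render: Callable[[List[Keypoints]], List[Frame]]                          # skeleton-to-video

    def __call__(self, source_video: List[Frame], target_video: List[Frame]) -> List[Frame]:
        src = [self.extract(f) for f in source_video]   # source motion as keypoints
        tgt = [self.extract(f) for f in target_video]   # target body as keypoints
        return self.render(self.retarget(src, tgt))
```

Keeping the stages as separate callables mirrors the modular design: the pose estimator or the renderer can be swapped without touching the retargeting network.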

### Comparison with LCM

- LCM uses synthetic paired data, while TransMoMo trains on purely unlabeled web data.