AutoEncoder

AutoEncoder (AE)

Autoencoder - Deep Learning

Applications:

- Dimensionality reduction: Reducing the Dimensionality of Data with Neural Networks (Science 2006)

- Information retrieval via semantic hashing: produce a low-dimensional binary code for each entry and store it in a hash table. Entries with the same binary code can then be returned directly, and similar (or slightly less similar) entries can be found efficiently by probing codes that differ from the query in only a few bits

- Denoising and inpainting tasks
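A minimal sketch of the semantic-hashing lookup, assuming the binary codes have already been produced by a trained encoder (the 4-bit codes and item names below are made up for illustration): entries are bucketed by their code, and a query probes every code within a small Hamming radius.

```python
from collections import defaultdict
from itertools import combinations


def build_index(codes):
    """Map each binary code (tuple of 0/1) to the list of item ids that share it."""
    table = defaultdict(list)
    for item_id, code in codes.items():
        table[tuple(code)].append(item_id)
    return table


def neighbours(code, radius):
    """Yield every code that differs from `code` in at most `radius` bits."""
    code = tuple(code)
    for r in range(radius + 1):
        for positions in combinations(range(len(code)), r):
            flipped = list(code)
            for p in positions:
                flipped[p] ^= 1
            yield tuple(flipped)


def search(table, query, radius=1):
    """Return ids stored under codes within `radius` bits of `query`, nearest buckets first."""
    results = []
    for candidate in neighbours(query, radius):
        results.extend(table.get(candidate, []))
    return results


# Hypothetical 4-bit codes standing in for encoder outputs.
codes = {"doc_a": [1, 0, 1, 1], "doc_b": [1, 0, 1, 1], "doc_c": [1, 0, 0, 1], "doc_d": [0, 1, 0, 0]}
index = build_index(codes)
print(search(index, [1, 0, 1, 1], radius=0))  # ['doc_a', 'doc_b']
print(search(index, [1, 0, 1, 1], radius=1))  # ['doc_a', 'doc_b', 'doc_c']
```

Each lookup is a hash-table access, so the cost depends on the number of probed codes rather than on the size of the collection.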

VAE

Auto-Encoding Variational Bayes (ICLR 2014)

How can an autoencoder be used for generative tasks, as a GAN is? The Variational AutoEncoder (VAE):

- encourages the (approximate) posterior of the latent representation, \(q_\phi(z|x)\), to stay close to a standard normal prior \(p(z) = \mathcal{N}(0, I)\) through a KL-divergence term in the loss

- the encoder (an MLP) computes a mean vector \(\mu\) and a standard deviation vector \(\sigma\), which are combined to sample the latent vector \(z\)
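The requirement that the latent posterior follow a standard normal distribution is usually implemented as the closed-form KL divergence between the encoder's diagonal Gaussian \(\mathcal{N}(\mu, \sigma^2 I)\) and \(\mathcal{N}(0, I)\), added to the reconstruction loss. A small sketch, assuming the encoder outputs \(\log\sigma^2\) (a common choice for numerical stability, not something stated in these notes):

```python
import math


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions.

    Closed form: 0.5 * sum(mu^2 + sigma^2 - 1 - log sigma^2).
    """
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0: already standard normal
print(kl_to_standard_normal([1.0, 0.0], [0.0, 0.0]))  # 0.5: mean shifted by 1 in one dimension
```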

Reparameterization Trick

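Sample noise \(\epsilon \sim \mathcal{N}(0, I)\) and write the latent vector as \(z = \mu + \sigma \odot \epsilon\). The randomness now sits in \(\epsilon\), which does not depend on the encoder, so gradients can be backpropagated through \(\mu\) and \(\sigma\) even though \(z\) is a random node.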

result: the latent space is also trained in regions that are not reached exactly by encoding the samples in the dataset
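A sketch of why the trick makes sampling differentiable, in plain Python with made-up values for \(\mu\) and \(\sigma\): once \(\epsilon\) is drawn, \(z\) is an ordinary deterministic function of \(\mu\) and \(\sigma\), so finite differences recover the analytic gradients \(\partial z/\partial\mu = 1\) and \(\partial z/\partial\sigma = \epsilon\).

```python
import random


def sample_z(mu, sigma, eps):
    """Reparameterized sample: z = mu + sigma * eps (element-wise)."""
    return [m + s * e for m, s, e in zip(mu, sigma, eps)]


rng = random.Random(0)
mu, sigma = [0.5, -1.0], [1.0, 0.5]
eps = [rng.gauss(0.0, 1.0) for _ in mu]  # the only random step, independent of mu and sigma

# With eps fixed, perturbing mu or sigma changes z smoothly, so the
# finite differences below approximate dz/dmu = 1 and dz/dsigma = eps.
h = 1e-6
z = sample_z(mu, sigma, eps)
dz_dmu0 = (sample_z([mu[0] + h, mu[1]], sigma, eps)[0] - z[0]) / h
dz_dsigma0 = (sample_z(mu, [sigma[0] + h, sigma[1]], eps)[0] - z[0]) / h
print(dz_dmu0, dz_dsigma0, eps[0])
```

In an autograd framework the same structure lets the optimizer update the encoder that produced \(\mu\) and \(\sigma\), while \(\epsilon\) is treated as a constant input.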

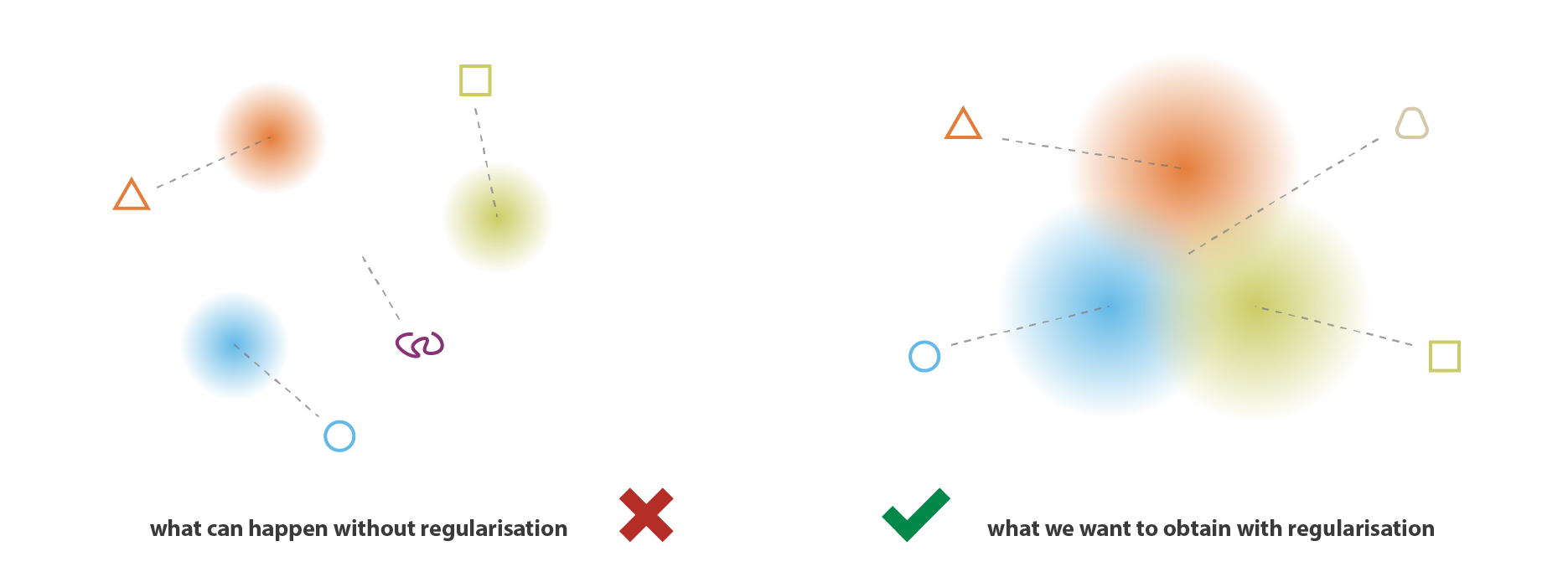

from Understanding Variational Autoencoders (VAEs) - Towards Data Science


Inference

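To generate new data, sample a latent representation \(z\) from the prior \(p(z) = \mathcal{N}(0, I)\), then use the decoder to generate the output. To reconstruct or vary a given input \(x^{(i)}\), sample instead from the approximate posterior \(\log q_\phi(z|x^{(i)}) = \log \mathcal{N}(z; \mu^{(i)}, \sigma^{2(i)} I)\).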
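The generation step as a short sketch, assuming a trained decoder is available as a plain function; `toy_decoder` below is a hypothetical stand-in, not a real network:

```python
import random


def generate(decoder, latent_dim, n_samples, rng=random):
    """Sample z ~ N(0, I) from the prior and decode each sample."""
    outputs = []
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
        outputs.append(decoder(z))
    return outputs


def toy_decoder(z):
    """Hypothetical stand-in for a trained decoder: maps a 2-d latent to a 3-d output."""
    return [z[0] + z[1], z[0] - z[1], 2.0 * z[0]]


samples = generate(toy_decoder, latent_dim=2, n_samples=4, rng=random.Random(42))
print(len(samples), len(samples[0]))  # 4 3
```

The encoder is not needed at this stage; it is only used during training and for reconstructing existing inputs.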

CVAE

Conditional VAE: Learning Structured Output Representation using Deep Conditional Generative Models (NIPS 2015)

VAE-GAN

Autoencoding beyond pixels using a learned similarity metric (ICML 2016)

- VAE + GAN: the VAE decoder also serves as the GAN generator

- replaces the element-wise (pixel) reconstruction loss with a feature-wise loss computed on an intermediate layer of the discriminator
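A toy illustration of the difference between the two losses. `disc_features` is a hypothetical stand-in for an intermediate discriminator layer, and the feature-wise loss is written as a squared error on those features (the paper frames it as a Gaussian likelihood on the l-th discriminator layer, which matches a squared error up to constants). A one-pixel shift is penalized by the pixel loss but not by these features:

```python
def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def disc_features(x):
    """Hypothetical stand-in for the l-th discriminator layer.

    A real discriminator learns its features; this toy one only measures
    total intensity and peak value, so it ignores where the content sits.
    """
    return [sum(x), max(x)]


def pixel_loss(x, x_rec):
    return mse(x, x_rec)


def feature_loss(x, x_rec):
    return mse(disc_features(x), disc_features(x_rec))


x = [0.0, 1.0, 0.0, 0.0]      # a 1-D "image" with one bright pixel
x_rec = [0.0, 0.0, 1.0, 0.0]  # the same content shifted by one pixel
print(pixel_loss(x, x_rec))    # 0.5
print(feature_loss(x, x_rec))  # 0.0
```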

VQ-VAE

Neural Discrete Representation Learning (NIPS 2017) - DeepMind

VQ-VAE stands for Vector Quantised Variational AutoEncoder

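- the encoder network outputs discrete, rather than continuous, codes: each encoder output \(z_e(x)\) is replaced by its nearest codebook vector \(e\), giving \(z_q(x)\). The codebook is learned through the codebook loss term, with \(\mathrm{sg}[\cdot]\) the stop-gradient operator and \(\beta\) the weight of the commitment term:
  $$L = -\log p(x|z_q(x)) + \lVert \mathrm{sg}[z_e(x)] - e \rVert_2^2 + \beta \lVert z_e(x) - \mathrm{sg}[e] \rVert_2^2$$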

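- an autoregressive model is trained as the prior over the discrete latent codes (PixelCNN for images, WaveNet for audio), so new samples are generated by sampling codes from this prior and decoding them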
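A minimal sketch of the quantization step and the forward values of the two codebook-related loss terms, assuming the terms \(\lVert \mathrm{sg}[z_e(x)] - e \rVert^2\) (codebook) and \(\beta \lVert z_e(x) - \mathrm{sg}[e] \rVert^2\) (commitment) from the VQ-VAE paper. The codebook values are hypothetical, and \(\beta = 0.25\) is used here only as an assumed default:

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(z_e, codebook):
    """Replace the encoder output z_e by its nearest codebook vector; return (index, z_q)."""
    k = min(range(len(codebook)), key=lambda i: squared_distance(z_e, codebook[i]))
    return k, codebook[k]


def vq_loss_terms(z_e, e, beta=0.25):
    """Forward values of the (codebook, commitment) loss terms.

    sg[.] only changes which parameters receive gradients: the codebook term
    updates e, the commitment term updates the encoder. Without autograd both
    reduce to the same squared distance, so only numbers are returned here.
    """
    d = squared_distance(z_e, e)
    return d, beta * d


codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]]  # hypothetical learned codes
z_e = [0.9, 0.7]
k, z_q = quantize(z_e, codebook)
print(k, z_q)  # 1 [1.0, 1.0]
print(vq_loss_terms(z_e, z_q))
```

Because `min` over indices is not differentiable, the paper passes the decoder's gradient from \(z_q(x)\) straight through to \(z_e(x)\) during training.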

Interpolation of VQ-VAE

Because VQ-VAE forces latent representations onto a discrete set of codebook vectors fitted to the dataset, it can be expected to be weaker at interpolating between samples to generate unseen ones.

On the lines task, we found that this (VQ-VAE) procedure produced poor interpolations.

– from the ACAI paper
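The intuition can be illustrated with a toy example, assuming a hypothetical 2-d codebook: interpolating linearly between two latent codes and then quantizing each point makes the path jump from one codebook vector to the other, with no intermediate representation in between. This only illustrates the argument; it is not the experiment from the ACAI paper.

```python
def lerp(a, b, t):
    """Linear interpolation (1 - t) * a + t * b, element-wise."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


def nearest(z, codebook):
    """Nearest codebook vector to z under squared Euclidean distance."""
    return min(codebook, key=lambda c: sum((x - y) ** 2 for x, y in zip(z, c)))


codebook = [[0.0, 0.0], [3.0, 0.0], [1.5, 2.0]]  # hypothetical learned codes
z_start, z_end = [0.0, 0.0], [3.0, 0.0]

path = [nearest(lerp(z_start, z_end, step / 3), codebook) for step in range(4)]
print(path)  # [[0.0, 0.0], [0.0, 0.0], [3.0, 0.0], [3.0, 0.0]]
```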

Application

ACAI

Understanding and Improving Interpolation in Autoencoders via an Adversarial Regularizer (ICLR 2019)

ACAI stands for Adversarially Constrained Autoencoder Interpolation

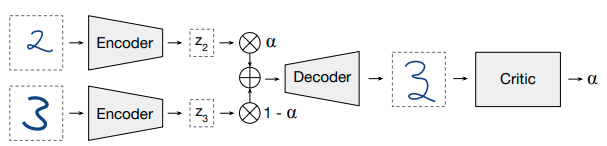

A critic network is fed interpolants and reconstructions and tries to predict the interpolation coefficient α corresponding to its input (with α = 0 for reconstructions). The autoencoder is trained to fool the critic into outputting α = 0 for interpolants.
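A sketch of the two objectives described above, for a single pair of inputs. `encode`, `decode` and `critic` are hypothetical callables (the critic returns a scalar estimate of α); α drawn uniformly from [0, 0.5] and λ = 0.5 are assumptions, and any additional regularization terms from the paper are omitted.

```python
import random


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def acai_losses(x1, x2, encode, decode, critic, lam=0.5, rng=random):
    """Return (autoencoder_loss, critic_loss) for one pair of inputs.

    In training, autoencoder_loss would only update encoder/decoder
    parameters and critic_loss would only update the critic.
    """
    z1, z2 = encode(x1), encode(x2)
    alpha = rng.uniform(0.0, 0.5)  # assumption: alpha ~ U[0, 0.5]
    x_interp = decode([alpha * a + (1 - alpha) * b for a, b in zip(z1, z2)])
    x_rec = decode(z1)

    # Critic: predict alpha for interpolants and 0 for reconstructions.
    critic_loss = (critic(x_interp) - alpha) ** 2 + critic(x_rec) ** 2

    # Autoencoder: reconstruct well and push the critic towards 0 on interpolants.
    ae_loss = mse(x1, x_rec) + lam * critic(x_interp) ** 2
    return ae_loss, critic_loss


def identity(v):
    return list(v)


def constant_critic(x):
    return 0.0  # a critic that always answers alpha = 0


ae, cr = acai_losses([1.0, 2.0], [3.0, 4.0], identity, identity, constant_critic, rng=random.Random(0))
print(ae, cr)  # ae is 0.0: perfect reconstructions and the critic is already fooled
```

With this trivial critic the critic loss equals α², i.e. the critic is penalized for failing to recover the mixing coefficient, while the autoencoder has nothing left to gain.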

ALAE

Adversarial Latent Autoencoders (CVPR 2020)

PyTorch implementation

StyleGAN-style generator + an autoencoder whose reconstruction loss is computed in latent space rather than in pixel space (is the concept a bit like UNIT in a single domain, with the loss based on latent space?)
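A sketch of what reconstruction in latent space means, with hypothetical `mapping`, `generator` and `encoder` functions: instead of comparing images, the latent code \(w\) is compared with the encoder's estimate of it after a round trip through the generator. The adversarial losses that ALAE trains alongside this term are omitted.

```python
def latent_reconstruction_loss(z, mapping, generator, encoder):
    """Squared error between w = mapping(z) and encoder(generator(w))."""
    w = mapping(z)
    w_rec = encoder(generator(w))
    return sum((a - b) ** 2 for a, b in zip(w, w_rec))


# Hypothetical toy networks (scaling by powers of two keeps the arithmetic exact).
def mapping(z):
    return [2.0 * v for v in z]


def generator(w):
    return [0.5 * v for v in w]


def encoder(x):
    return [2.0 * v for v in x]  # exactly inverts the toy generator


def lossy_encoder(x):
    return [v for v in x]  # does not invert the generator


z = [0.3, -1.2, 0.7]
print(latent_reconstruction_loss(z, mapping, generator, encoder))  # 0.0
print(latent_reconstruction_loss(z, mapping, generator, lossy_encoder))
```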