Conditional / Representation of GAN

Applying conditions to GANs, and interactive generation/editing

Representation Learning

Usage:

- Manipulate the output of generative models

- Transfer learning: reuse the learned embedding (feature vector) for other tasks (e.g. FaceNet for face recognition, word2vec for NLP, VGG features for a perceptual loss)

Latent Space

The latent space is the space of codes produced by the encoder.

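Manipulation Process

Input: an unlabelled dataset to train the encoder, plus a labelled dataset marking which inputs have the attribute to manipulate

- Train an encoder-decoder (e.g. an autoencoder) on the unlabelled dataset

- Compute the average encoding of the positive inputs and of the negative inputs in the labelled dataset

- Take the difference of the two averages to get the manipulation vector z_manipulate

- Manipulate a new x_input by encoding it, moving its code along z_manipulate, and decoding the result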
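A minimal sketch of the manipulation process above, in Python with NumPy. The helpers `attribute_vector` and `manipulate` are hypothetical names, `alpha` (how far to move along the vector) is an assumed extra parameter, and the `encode`/`decode` pair is a toy linear stand-in for a trained autoencoder, chosen only so the arithmetic is easy to follow.

```python
import numpy as np

def attribute_vector(encode, x_pos, x_neg):
    """z_manipulate = mean code of positive inputs - mean code of negative inputs."""
    z_pos = np.mean([encode(x) for x in x_pos], axis=0)
    z_neg = np.mean([encode(x) for x in x_neg], axis=0)
    return z_pos - z_neg

def manipulate(encode, decode, x_input, z_manipulate, alpha=1.0):
    """Encode x_input, move its code alpha steps along z_manipulate, decode."""
    return decode(encode(x_input) + alpha * z_manipulate)

# Hypothetical stand-in for a trained autoencoder: a linear encoder with
# orthonormal rows (3-D input -> 2-D code) and its transpose as the decoder.
W_enc = np.array([[1.0, 0.0, 0.0],
                  [0.0, 1.0, 0.0]])
encode = lambda x: W_enc @ x
decode = lambda z: W_enc.T @ z

# Toy labelled data: the attribute shows up in the second coordinate.
x_pos = [np.array([1.0, 2.0, 0.0]), np.array([3.0, 2.0, 0.0])]  # attribute present
x_neg = [np.array([1.0, 0.0, 0.0]), np.array([3.0, 0.0, 0.0])]  # attribute absent

z_manipulate = attribute_vector(encode, x_pos, x_neg)
x_edit = manipulate(encode, decode, np.array([5.0, 0.0, 0.0]), z_manipulate)
```

With these toy inputs the manipulation vector is [0, 2] and editing [5, 0, 0] gives [5, 2, 0]: only the coordinate that differs between the positive and negative examples changes.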

Conditional GAN

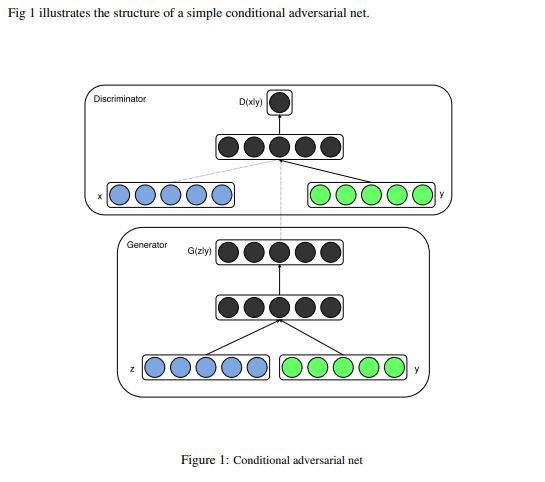

Conditional Generative Adversarial Nets (CoRR 2014) by Mehdi Mirza, Simon Osindero

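Extends the GAN with supervised conditioning: extra information y (e.g. a class label) is fed to both the generator and the discriminator, so the generator learns to produce samples for a given condition.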

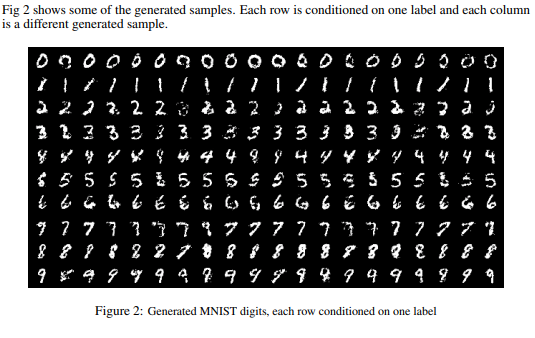

Applications shown in the paper: generating MNIST digits (from noise plus a condition specifying the digit class) and image tagging

Image-to-image translation models such as pix2pix can be considered conditional GANs, with the input image as the condition.
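A minimal sketch of how the condition enters a conditional GAN in the MNIST setting: the one-hot digit label y is concatenated with the noise z for the generator, and with the flattened image for the discriminator. The function names and sizes (100-D noise, 10 classes, 28×28 images) are assumptions for illustration, and the networks themselves are omitted; the paper's MNIST model maps z and y through hidden layers before combining them, so plain concatenation is only the simplest form of the idea.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Encode integer class labels as one-hot rows."""
    out = np.zeros((len(labels), num_classes))
    out[np.arange(len(labels)), labels] = 1.0
    return out

def generator_input(z, labels, num_classes=10):
    """Input to G(z | y): noise concatenated with the condition."""
    return np.concatenate([z, one_hot(labels, num_classes)], axis=1)

def discriminator_input(x, labels, num_classes=10):
    """Input to D(x | y): flattened image concatenated with the same condition."""
    return np.concatenate([x.reshape(len(x), -1), one_hot(labels, num_classes)], axis=1)

rng = np.random.default_rng(0)
z = rng.uniform(-1.0, 1.0, size=(4, 100))   # 4 noise vectors
labels = np.array([3, 3, 7, 7])              # requested digit classes
g_in = generator_input(z, labels)            # shape (4, 110)
x = rng.random((4, 28, 28))                  # placeholder images
d_in = discriminator_input(x, labels)        # shape (4, 794)
```

Changing `labels` while keeping `z` fixed asks the generator for a different digit with the same noise, which is how the condition controls the output.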

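InfoGAN

InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets (NIPS 2016)

Applies the GAN with learned conditions: unlike the conditional GAN, the condition (a latent code c fed to the generator alongside the noise z) is not given by labels but learned without supervision, by maximizing the mutual information I(c; G(z, c)) between the code and the generated image.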
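A sketch of the quantity InfoGAN maximizes, assuming a categorical code c with prior P(c). Because I(c; G(z, c)) cannot be computed directly, the paper maximizes a variational lower bound \(L_I = \mathbb{E}[\log Q(c \mid x)] + H(c)\), where Q is an auxiliary network (sharing layers with the discriminator) that predicts c from the generated image x = G(z, c); the full objective subtracts λ·L_I from the usual GAN value function. The function `info_lower_bound` is a hypothetical helper, and `q_probs` stands in for Q's softmax output.

```python
import numpy as np

def info_lower_bound(c_idx, q_probs, prior):
    """Monte Carlo estimate of L_I = E[log Q(c|x)] + H(c) for a categorical code.

    c_idx:   (N,) code indices that were fed to G
    q_probs: (N, K) Q(c|x) predicted for each generated x = G(z, c)
    prior:   (K,) categorical prior P(c)
    """
    log_q = np.log(q_probs[np.arange(len(c_idx)), c_idx])  # log Q(true c | x)
    entropy = -np.sum(prior * np.log(prior))               # H(c), constant w.r.t. G and Q
    return log_q.mean() + entropy

K = 10
prior = np.full(K, 1.0 / K)                 # uniform 10-way code
c = np.array([0, 3, 7, 9])
perfect_q = np.eye(K)[c]                    # Q recovers c exactly
uninformative_q = np.full((4, K), 1.0 / K)  # Q ignores the image
```

A Q that recovers c perfectly reaches the upper bound H(c) = log 10 ≈ 2.303 for a uniform 10-way code, while an uninformative Q gives 0, i.e. the generated image carries no information about c.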

iGAN

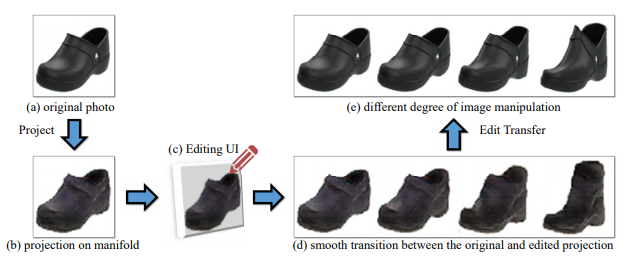

Generative Visual Manipulation on the Natural Image Manifold (ECCV 2016) - Adobe

Implemented in Theano.

Learns the natural image manifold directly from data using a generative adversarial network, then defines a class of image editing operations and constrains their output to lie on that learned manifold at all times.

The architecture is based on DCGAN.

Manipulating the Latent Vector

Each editing operation is formulated as a constraint \(f_g(x)=v_g\), where g ranges over color, shape and warping constraints.

Given an initial projection \(x_0\), find a new image x that stays close to \(x_0\) while satisfying as many constraints as possible, via gradient-descent updates on the latent vector, so that the edited image stays on the learned manifold. As in optimization-based style transfer, the optimization is run for the specified loss rather than training a model for it.
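A toy sketch of the latent-vector optimization, assuming the objective \(\sum_g \|f_g(G(z)) - v_g\|^2 + \lambda_s \|z - z_0\|^2\) (constraint violations plus a term keeping the result near the starting code \(z_0\)) minimized by plain gradient descent. The linear generator `G`, the linear constraints `(M_g, v_g)`, the helper `edit_latent` and all hyperparameters are hypothetical stand-ins chosen so the gradient can be written by hand; a real GAN generator would need automatic differentiation.

```python
import numpy as np

# Hypothetical toy generator: linear map from a 2-D latent z to a 3-"pixel" image.
A = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
G = lambda z: A @ z

def edit_latent(z0, constraints, lam_s=0.1, lr=0.05, steps=2000):
    """Gradient descent on z for
       sum_g ||M_g G(z) - v_g||^2 + lam_s * ||z - z0||^2,
    where each constraint is a pair (M_g, v_g) with linear f_g(x) = M_g @ x."""
    z = z0.copy()
    for _ in range(steps):
        grad = 2.0 * lam_s * (z - z0)          # pull towards the initial projection
        x = G(z)
        for M, v in constraints:
            # chain rule through the linear f_g and the linear G
            grad += 2.0 * A.T @ M.T @ (M @ x - v)
        z -= lr * grad
    return z

z0 = np.zeros(2)
color_constraint = (np.array([[1.0, 0.0, 0.0]]), np.array([2.0]))  # "pixel 0 should be 2"
z_star = edit_latent(z0, [color_constraint])
x_star = G(z_star)
```

The optimum here is z = [20/11, 0] ≈ [1.818, 0]: the proximity term stops short of fully satisfying the constraint, and pixel 2 changes as well, because every output G(z) must stay in the generator's range (the toy "manifold").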

Applications

- Manipulating an existing photo based on an underlying generative model to achieve a different look (shape and color);

- “Generative transformation” of one image to look more like another;

- Generating a new image from scratch based on the user’s scribbles and a warping UI.

Training techniques noted: feature matching, minibatch discrimination

Projection Discriminator¶

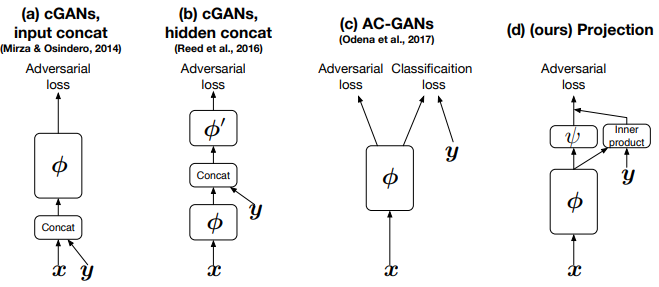

cGANS with Projection Discriminator (ICLR 2018)

incorporate the conditional information into the discriminator in projection based way to improve quality of the class conditional image generation.


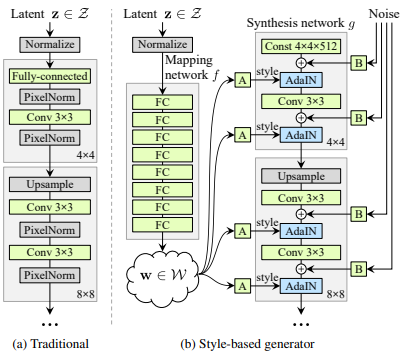
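A sketch of the projection discriminator's output, \(D(x, y) = y^\top V \phi(x) + \psi(\phi(x))\): \(\phi(x)\) are the features from the discriminator body, V is a learned class-embedding matrix, and \(\psi\) is a linear head giving the unconditional term. Feature extraction is out of scope, so `phi_x`, `embed` and `w_psi` are hypothetical arrays passed in directly, and `projection_logit` is an illustrative name.

```python
import numpy as np

def projection_logit(phi_x, y, embed, w_psi, b_psi=0.0):
    """D(x, y) = <V_y, phi(x)> + psi(phi(x)) for integer labels y.

    phi_x: (N, F) image features from the discriminator body
    y:     (N,) integer class labels
    embed: (C, F) class-embedding matrix V, one row per class
    w_psi, b_psi: linear head psi giving the unconditional term
    """
    unconditional = phi_x @ w_psi + b_psi           # psi(phi(x))
    projection = np.sum(embed[y] * phi_x, axis=1)   # inner product with the class embedding
    return unconditional + projection

phi_x = np.array([[1.0, 2.0],
                  [1.0, 2.0]])      # same features for both samples
y = np.array([0, 1])                # but different classes
embed = np.eye(2)
w_psi = np.array([0.5, 0.5])
logits = projection_logit(phi_x, y, embed, w_psi)
```

Identical features score 2.5 under class 0 and 3.5 under class 1: the condition changes the discriminator's output only through the inner product term.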

StyleGAN

A Style-Based Generator Architecture for Generative Adversarial Networks (CVPR 2019) by Nvidia


The generator starts from a learned constant input and adjusts the “style” of the image at each convolution layer based on the latent code, therefore directly controlling the strength of image features at different scales.
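In StyleGAN the per-layer "style" is applied with adaptive instance normalization (AdaIN): each feature map is normalized to zero mean and unit variance, then scaled and shifted by per-channel values (y_s, y_b) obtained from the intermediate latent w through a learned affine transform. A minimal NumPy sketch of that operation on one sample; the features, w and the affine weights `A_s`/`A_b` are random placeholders, not real network values, and centring the scale around 1 is an assumption of this sketch.

```python
import numpy as np

def adain(x, y_s, y_b, eps=1e-8):
    """Adaptive instance normalization for one sample.

    x:   (C, H, W) feature maps
    y_s: (C,) per-channel style scale, y_b: (C,) per-channel style bias
    """
    mu = x.mean(axis=(1, 2), keepdims=True)
    sigma = x.std(axis=(1, 2), keepdims=True)
    x_norm = (x - mu) / (sigma + eps)   # each channel: zero mean, unit std
    return y_s[:, None, None] * x_norm + y_b[:, None, None]

rng = np.random.default_rng(0)
C, H, W, w_dim = 4, 8, 8, 16
x = rng.normal(3.0, 2.0, size=(C, H, W))   # placeholder conv features
w = rng.normal(size=w_dim)                 # placeholder intermediate latent w
A_s = rng.normal(size=(C, w_dim)) * 0.1    # hypothetical learned affine (scale part)
A_b = rng.normal(size=(C, w_dim)) * 0.1    # hypothetical learned affine (bias part)
y_s = 1.0 + A_s @ w                        # style scale, centred around 1
y_b = A_b @ w                              # style bias
out = adain(x, y_s, y_b)
```

After AdaIN each channel's mean equals y_b and its standard deviation equals |y_s|, so the style derived from w fully sets the first- and second-order statistics of that layer's features; doing this at every layer is what lets the latent code control features at different scales.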

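StyleALAE

Model: autoencoder (ALAE, Adversarial Latent Autoencoder)

Adds an encoder to a StyleGAN-style generator and trains them together as an autoencoder (adversarially, rather than as a VAE), so that real images can be encoded and then manipulated, whereas StyleGAN alone has no encoder and can directly manipulate only images it generated itself.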